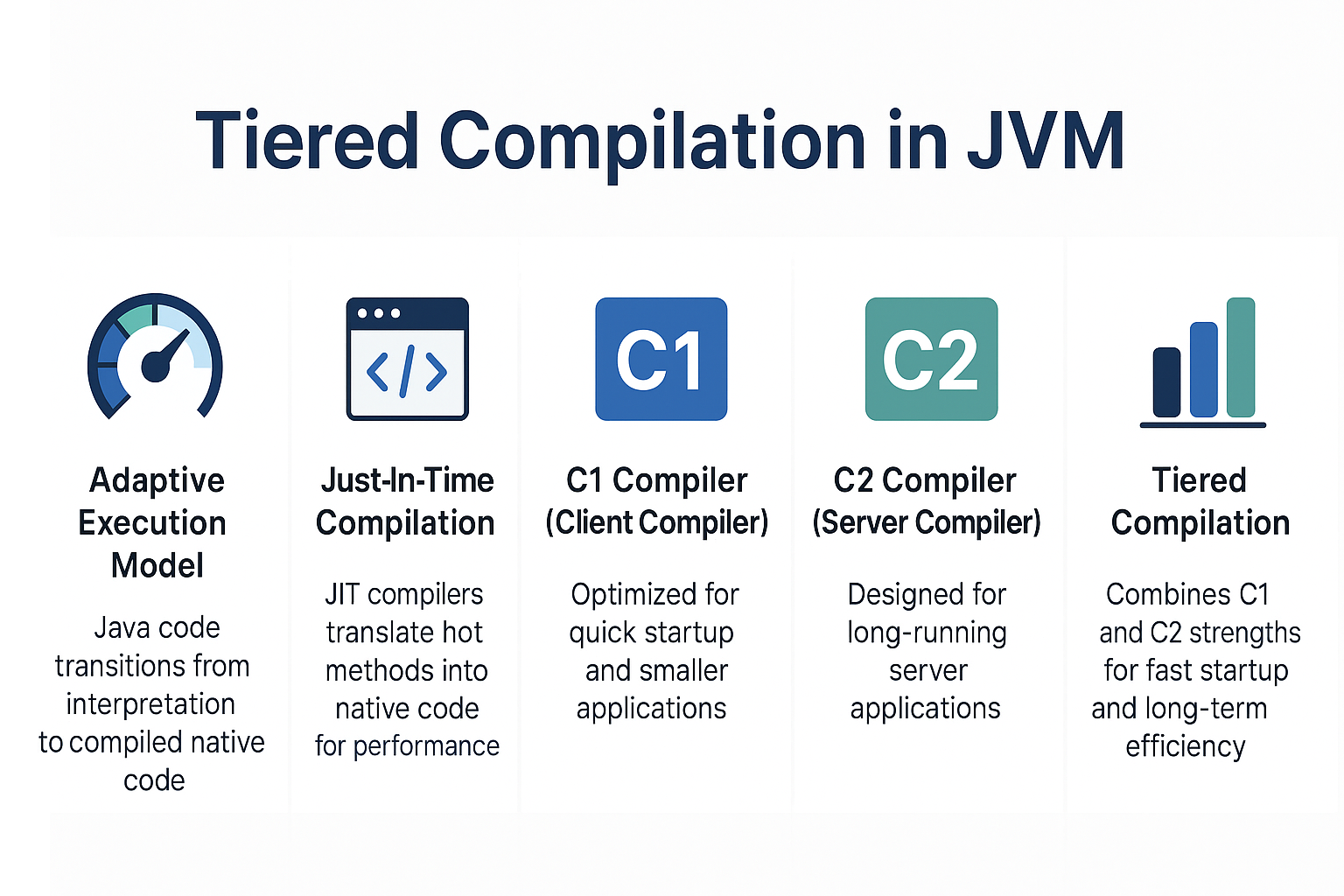

One of the JVM’s biggest strengths is its adaptive execution model. Java code starts with interpretation and gradually transitions into compiled native code for performance. This process is driven by Just-In-Time (JIT) compilation, which uses two key compilers in the HotSpot JVM: C1 (client compiler) and C2 (server compiler).

Tiered compilation, introduced in Java 7 and enabled by default since Java 8, combines the strengths of both C1 and C2. This hybrid approach balances fast startup times with long-term performance gains, making Java applications efficient for both short-lived tools and long-running enterprise services.

In this tutorial, we’ll explore the differences between C1 and C2 compilers, how tiered compilation works, and best practices for tuning it in production.

The Role of JIT in JVM

- Interpreter: Executes bytecode one instruction at a time; it starts immediately but runs more slowly than compiled code.

- JIT Compilers (C1 & C2): Translate hot methods into native code for faster execution.

- Tiered Compilation: Bridges the gap between quick startup and peak performance.

C1 Compiler (Client Compiler)

- Optimized for quick startup and smaller applications.

- Performs lightweight optimizations.

- Used in desktop, GUI, and short-lived applications.

Key Features

- Fast compilation, minimal overhead.

- Basic optimizations (inlining, constant folding).

- Can instrument the code it compiles with profiling, gathering runtime data for C2.

C2 Compiler (Server Compiler)

- Designed for long-running server applications.

- Performs aggressive optimizations based on runtime profiling.

Key Features

- Advanced optimizations: escape analysis, loop unrolling, intrinsics.

- Produces highly optimized machine code.

- Higher compilation overhead, but better runtime performance.

Tiered Compilation: Combining C1 and C2

How It Works

- Interpretation Phase → Bytecode runs under the interpreter.

- C1 Compilation → Hot methods are compiled quickly with lightweight optimizations.

- Profiling → C1-compiled code gathers detailed runtime data (branch frequencies, receiver types at call sites) that later guides inlining and other optimizations.

- C2 Compilation → Re-compiles hot methods with aggressive optimizations using profiling data.
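
The phases above map onto HotSpot's five compilation levels. The following is a minimal sketch of that mapping; the class, enum, and method names are hypothetical and only illustrate the levels, they are not part of any JVM API. A hot method typically moves from level 0 to level 3 and then to level 4, while trivial methods may stop at level 1.

```java
public class TierLevels {

    // Hypothetical model of HotSpot's tiered compilation levels.
    enum Tier {
        INTERPRETER(0, "interpreter"),
        C1_NO_PROFILING(1, "C1"),
        C1_LIMITED_PROFILING(2, "C1"),
        C1_FULL_PROFILING(3, "C1"),
        C2_OPTIMIZED(4, "C2");

        final int level;
        final String executor;

        Tier(int level, String executor) {
            this.level = level;
            this.executor = executor;
        }
    }

    // Returns which component runs or compiles code at the given level.
    static String executorFor(int level) {
        for (Tier tier : Tier.values()) {
            if (tier.level == level) {
                return tier.executor;
            }
        }
        throw new IllegalArgumentException("unknown tier: " + level);
    }

    public static void main(String[] args) {
        for (Tier tier : Tier.values()) {
            System.out.println(tier.level + " " + tier + " -> " + tier.executor);
        }
    }
}
```

The level numbers are the ones that appear in the tier column of -XX:+PrintCompilation output, which makes the log easier to read.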

Benefits

- Fast startup (thanks to C1).

- Long-term efficiency (thanks to C2).

- Adaptive optimizations based on actual workload.

Example: Tiered Compilation in Action

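    public class TieredCompilationExample {

        // Small, frequently called method: a typical JIT candidate.
        public static int compute(int x) {
            return x * 2;
        }

        public static void main(String[] args) {
            long sum = 0;
            for (int i = 0; i < 1_000_000; i++) {
                // Use the result so the JIT cannot discard the call as dead code.
                sum += compute(i);
            }
            System.out.println(sum);
        }
    }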

- Initially interpreted.

- C1 compiles compute() quickly.

- C2 recompiles it with optimizations after profiling data is collected.

Monitoring Tiered Compilation

Enable Compilation Logs

-XX:+UnlockDiagnosticVMOptions -XX:+PrintCompilation -XX:+PrintTieredEvents
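
Alongside the log flags, the standard java.lang.management API exposes a coarse view of JIT activity from inside the application. The sketch below reads the cumulative compilation time through CompilationMXBean; the class name JitTimeProbe and the -1 "not available" convention are assumptions made for illustration.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

public class JitTimeProbe {

    // Returns the approximate total JIT compilation time in milliseconds,
    // or -1 if this JVM has no compiler or does not support the measurement.
    static long totalCompilationMillis() {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null || !jit.isCompilationTimeMonitoringSupported()) {
            return -1;
        }
        return jit.getTotalCompilationTime();
    }

    public static void main(String[] args) {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        System.out.println("JIT compiler: " + (jit == null ? "none" : jit.getName()));
        System.out.println("Total compilation time (ms): " + totalCompilationMillis());
    }
}
```

Sampling this value at intervals gives a rough indication of when warm-up compilation has settled, though it does not distinguish C1 from C2 work.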

Using JFR + Mission Control

- Track which methods are compiled by C1 vs C2.

- Detect frequent de-optimizations.

- Analyze warm-up performance.
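
The same data can be captured programmatically with the JFR API. The following is a minimal sketch, assuming JDK 11 or later and assuming the jdk.Compilation event exposes a compileLevel field (the code checks for the field before reading it). The class name, the workload, and the temp file prefix are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.TreeMap;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordingFile;

public class JfrCompilationSketch {

    static volatile long sink; // keeps the workload result alive

    static int work(int x) {
        return x * 31 + 7;
    }

    // Records jdk.Compilation events around a small workload and
    // counts the recorded compilations per compile level.
    static Map<Integer, Integer> compilationsByLevel() {
        Map<Integer, Integer> counts = new TreeMap<>();
        try {
            Path file = Files.createTempFile("jit-sketch", ".jfr");
            try (Recording recording = new Recording()) {
                // Drop the duration threshold so short compilations are recorded too.
                recording.enable("jdk.Compilation").withoutThreshold();
                recording.start();
                long sum = 0;
                for (int i = 0; i < 2_000_000; i++) {
                    sum += work(i);
                }
                sink = sum;
                recording.stop();
                recording.dump(file);
            }
            for (RecordedEvent event : RecordingFile.readAllEvents(file)) {
                if (!"jdk.Compilation".equals(event.getEventType().getName())
                        || !event.hasField("compileLevel")) {
                    continue;
                }
                Object level = event.getValue("compileLevel");
                if (level instanceof Number) {
                    counts.merge(((Number) level).intValue(), 1, Integer::sum);
                }
            }
            Files.deleteIfExists(file);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return counts;
    }

    public static void main(String[] args) {
        compilationsByLevel().forEach((level, n) ->
                System.out.println("level " + level + ": " + n + " compilations"));
    }
}
```

Levels 1 to 3 correspond to C1 and level 4 to C2, so the counts give a quick C1 versus C2 breakdown. For production analysis, a recording opened in Mission Control shows the same events with method names and durations.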

Real-World Case Study

Scenario: A financial trading system experienced high latency during startup.

- Diagnosis: Startup was delayed by JIT optimizations.

- Solution: Enabled tiered compilation so that C1 handled initial compilation, with C2 taking over later.

- Result: Startup latency reduced by 50%, while long-term performance remained optimal.

Pitfalls and Troubleshooting

- Warm-up overhead: Methods may be compiled multiple times.

- De-optimization: When a speculative assumption made by the compiler turns out to be wrong, the compiled code is discarded and execution falls back to the interpreter.

- Disabling tiered compilation (-XX:-TieredCompilation) can hurt startup or long-term performance.

- Over-tuning: Avoid unnecessary JIT flags unless profiling demands it.
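
Before troubleshooting, it helps to confirm whether tiered compilation is actually enabled in the running JVM. The sketch below queries the flag through HotSpotDiagnosticMXBean, which is HotSpot-specific; the class name and the "unknown" fallback for other JVMs are assumptions made for illustration.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

public class TieredFlagCheck {

    // Returns the current value of a HotSpot VM option as a string,
    // or "unknown" if the option or the diagnostic bean is unavailable.
    static String flagValue(String name) {
        try {
            HotSpotDiagnosticMXBean bean =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            if (bean == null) {
                return "unknown";
            }
            return bean.getVMOption(name).getValue();
        } catch (IllegalArgumentException e) {
            // Thrown when the option does not exist or the bean is not supported.
            return "unknown";
        }
    }

    public static void main(String[] args) {
        System.out.println("TieredCompilation = " + flagValue("TieredCompilation"));
    }
}
```

Running it with and without -XX:-TieredCompilation shows whether a startup script or container image is silently overriding the default.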

Best Practices

- Keep tiered compilation enabled by default.

- Warm up services in staging environments before production traffic.

- Use JFR and GC logs to correlate compilation with GC behavior.

- Avoid disabling tiered compilation unless diagnosing JIT issues.
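
The warm-up advice can be illustrated with a small timing loop. This is a rough sketch, not a benchmark harness: the class name, the workload, and the round counts are hypothetical, and a tool such as JMH is a better fit for real measurements.

```java
public class WarmUpSketch {

    static volatile long sink; // keeps results alive so the work is not optimized away

    // Simple deterministic workload: sum of 2*i for i in [0, n).
    static long checksum(int n) {
        long sum = 0;
        for (int i = 0; i < n; i++) {
            sum += i * 2L;
        }
        return sum;
    }

    // Runs the workload repeatedly and records the elapsed nanoseconds of each round.
    static long[] timeRounds(int rounds, int n) {
        long[] nanos = new long[rounds];
        for (int r = 0; r < rounds; r++) {
            long start = System.nanoTime();
            sink = checksum(n);
            nanos[r] = System.nanoTime() - start;
        }
        return nanos;
    }

    public static void main(String[] args) {
        long[] nanos = timeRounds(20, 1_000_000);
        for (int r = 0; r < nanos.length; r++) {
            System.out.println("round " + r + ": " + nanos[r] / 1_000 + " us");
        }
    }
}
```

Early rounds usually take longer than later ones as the method moves from the interpreter through C1 to C2, which is the effect a staged warm-up is meant to absorb before real traffic arrives.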

JVM Version Tracker

- Java 8: Tiered compilation enabled by default.

- Java 11: Further improvements in tiered compilation heuristics.

- Java 17: ZGC/Shenandoah stable, integrated well with JIT.

- Java 21+: Generational ZGC arrives in JDK 21; Project Lilliput's compact object headers follow as an experimental option in JDK 24.

Conclusion & Key Takeaways

- C1: Quick startup, lightweight optimizations.

- C2: Aggressive optimizations for long-running apps.

- Tiered Compilation: Combines both for the best of startup and runtime performance.

- Monitoring and tuning tiered compilation ensures balanced performance for modern Java workloads.

FAQ

1. What is the JVM memory model and why does it matter?

It governs how threads interact with memory, ensuring JIT-compiled code remains consistent.

2. How does G1 GC differ from CMS?

G1 is region-based with compaction; CMS was prone to fragmentation.

3. When should I use ZGC or Shenandoah?

When ultra-low-latency is required with very large heaps.

4. What are JVM safepoints and why do they matter?

They are points where application threads pause in a known state so the JVM can safely run GC, de-optimization, and other VM operations.

5. How do I solve OutOfMemoryError in production?

Use GC logs, heap dumps, adjust -Xmx, and fix leaks.

6. What are the trade-offs of throughput vs latency tuning?

Throughput prioritizes raw speed, latency prioritizes predictable response times.

7. How do I read and interpret GC logs?

Look at heap before/after sizes, pause durations, and GC types.

8. How does JIT compilation optimize performance?

By compiling hot code paths to native code, eliminating interpreter overhead.

9. What’s the future of GC in Java (Project Lilliput)?

Smaller object headers improve memory efficiency and JIT effectiveness.

10. How does GC differ in microservices vs monoliths?

Microservices often need consistent latency, while monoliths focus on throughput.