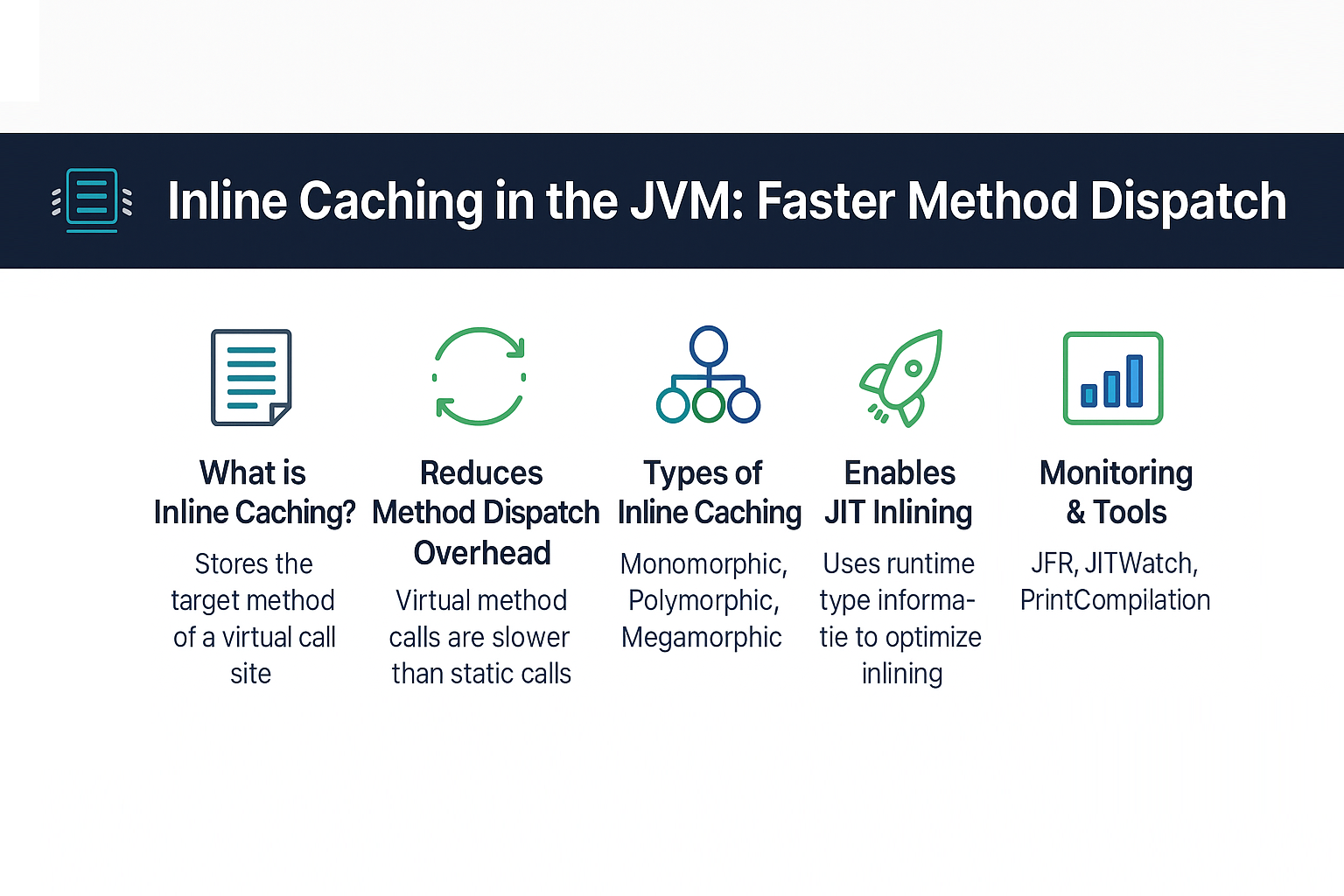

Java is an object-oriented language, and most instance-method calls are virtual: the method that runs is chosen at runtime from the receiver's class. This provides flexibility and polymorphism, but it also introduces method dispatch overhead. To reduce it, the HotSpot JVM uses Inline Caching (IC), a JIT compiler technique that remembers the receiver type seen at each call site so that most virtual calls cost little more than direct calls.

Inline caching is a cornerstone of JIT optimization: it speeds up method dispatch in compiled code and, together with the receiver-type profiles the JVM collects, informs inlining decisions. This tutorial explores inline caching in depth, including monomorphic, polymorphic, and megamorphic caching, with real-world examples.

What is Inline Caching?

- Definition: Inline caching remembers, at each virtual call site, the receiver type from an earlier call together with the method it resolved to, so later calls with the same receiver type skip the full dynamic lookup.

- Goal: Speed up method dispatch by leveraging runtime type information.

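- Mechanism: The JVM records the receiver type at a call site and caches the resolved method. Each later call compares the receiver's class with the cached class: on a hit it jumps straight to the cached method; on a miss it performs the full lookup and updates the call site.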
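The hit/miss logic can be modelled in plain Java. This is a simplified sketch, not HotSpot's implementation: the hypothetical `CallSite` class stands for one call site, a map named `METHOD_TABLE` stands in for the JVM's method lookup, and on a miss the single cache entry is simply overwritten (real JVMs may instead switch the site to a more general dispatch, as the types below describe).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

public class MonomorphicCacheSketch {
    // Hypothetical "method table": which speak() implementation each class declares
    static final Map<Class<?>, Function<Object, String>> METHOD_TABLE = new HashMap<>();

    static class Animal {}
    static class Dog extends Animal {}
    static class Cat extends Animal {}

    static {
        METHOD_TABLE.put(Animal.class, o -> "Animal");
        METHOD_TABLE.put(Dog.class, o -> "Dog");
        METHOD_TABLE.put(Cat.class, o -> "Cat");
    }

    // Models one call site holding a single cached (receiver class, target) pair
    static class CallSite {
        Class<?> cachedClass;
        Function<Object, String> cachedTarget;
        int hits, misses;

        String call(Object receiver) {
            Class<?> type = receiver.getClass();
            if (type == cachedClass) {
                hits++;                      // fast path: one class comparison
            } else {
                misses++;                    // slow path: walk up the hierarchy to find the target
                Class<?> c = type;
                while (!METHOD_TABLE.containsKey(c)) {
                    c = c.getSuperclass();
                }
                cachedTarget = METHOD_TABLE.get(c);
                cachedClass = type;          // a monomorphic cache keeps only one entry
            }
            return cachedTarget.apply(receiver);
        }
    }

    public static void main(String[] args) {
        CallSite site = new CallSite();
        for (int i = 0; i < 5; i++) {
            site.call(new Dog());
        }
        System.out.println(site.call(new Cat()) + " hits=" + site.hits + " misses=" + site.misses);
    }
}
```

Running `main` prints `Cat hits=4 misses=2`: the first `Dog` call misses, the next four hit, and the `Cat` call misses again.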

Why Inline Caching Matters

- Virtual method calls need a dispatch-table lookup (a vtable, or an itable for interface calls), which is slower than a statically bound call.

- Inline caching reduces lookup overhead.

- Enables the JIT to inline hot methods.

- Improves overall application throughput.

Analogy: Instead of asking for directions every time you drive to the store, you remember the route after the first trip, so future trips are faster. If you set off for a different store, you have to look the route up again.

Types of Inline Caching

1. Monomorphic Inline Cache

- Scenario: Call site always sees the same receiver type.

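- Optimization: The cache holds one receiver type and its resolved method, so a call costs a single class check plus a direct jump, and the JIT can inline the target.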

- Example:

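```java
class Animal { void speak() { System.out.println("Animal"); } }

class Dog extends Animal { @Override void speak() { System.out.println("Dog"); } }

public class MonomorphicExample {
    public static void main(String[] args) {
        Animal a = new Dog();
        a.speak(); // Monomorphic if this call site only ever sees Dog
    }
}
```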

2. Polymorphic Inline Cache

- Scenario: Call site sees a small set of receiver types.

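- Optimization: The call site checks the receiver against a small set of known types. HotSpot's C2 compiler, for instance, can inline up to two profiled receiver types behind type checks (a bimorphic call site).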

- Example:

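```java
// Assumes a Cat subclass declared like Dog and a boolean `condition`
Animal a = condition ? new Dog() : new Cat();
a.speak(); // Polymorphic if this call site sees both Dog and Cat
```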

3. Megamorphic Inline Cache

- Scenario: Call site sees many types.

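- Optimization: Falls back to a full vtable or itable lookup, and the JIT generally cannot inline the target.

- Example: A single interface call site that receives many different implementations, as well as reflection-heavy or dynamic-proxy-based code.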
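The progression from monomorphic to polymorphic to megamorphic can be modelled as a small state machine. This is a hypothetical sketch: the `CallSite` class, its `capacity` parameter, and the rule "stop tracking once capacity is exceeded" are illustrative assumptions, not JVM internals. A capacity of 2 is chosen only because it matches the bimorphic case mentioned above.

```java
import java.util.ArrayList;
import java.util.List;

public class PolymorphicCacheSketch {
    enum State { UNINITIALIZED, MONOMORPHIC, POLYMORPHIC, MEGAMORPHIC }

    // Hypothetical model of a call site that caches up to `capacity` receiver classes
    static class CallSite {
        private final int capacity;
        private final List<Class<?>> seen = new ArrayList<>();
        private boolean megamorphic = false;

        CallSite(int capacity) { this.capacity = capacity; }

        // Records the receiver's class, as a call through this site would
        void observe(Object receiver) {
            if (megamorphic) return;             // once megamorphic, stop tracking types
            Class<?> type = receiver.getClass();
            if (seen.contains(type)) return;     // cache hit: state unchanged
            if (seen.size() < capacity) {
                seen.add(type);                  // room left: add another entry
            } else {
                megamorphic = true;              // too many types: fall back to generic dispatch
                seen.clear();
            }
        }

        State state() {
            if (megamorphic) return State.MEGAMORPHIC;
            switch (seen.size()) {
                case 0: return State.UNINITIALIZED;
                case 1: return State.MONOMORPHIC;
                default: return State.POLYMORPHIC;
            }
        }
    }

    static class Dog {}
    static class Cat {}
    static class Cow {}

    public static void main(String[] args) {
        CallSite site = new CallSite(2);
        Object[] receivers = { new Dog(), new Dog(), new Cat(), new Cow() };
        for (Object r : receivers) {
            site.observe(r);
            System.out.println(r.getClass().getSimpleName() + " -> " + site.state());
        }
    }
}
```

Running `main` prints `Dog -> MONOMORPHIC` twice, then `Cat -> POLYMORPHIC`, then `Cow -> MEGAMORPHIC`.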

Inline Caching and JIT Compilation

- Interpreter: Records type feedback at call sites.

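- C1 Compiler: Uses inline caches for virtual calls in compiled code and, in tiered mode, keeps collecting type profiles.

- C2 Compiler (or Graal JIT): Uses the collected profiles to inline monomorphic calls behind a type guard and to optimize polymorphic calls.

- Deoptimization: If a speculative assumption breaks (for example, the type guard around an inlined call sees a new receiver type), the JVM deoptimizes the compiled code back to the interpreter, updates the profile, and may recompile later. A plain inline-cache miss, by contrast, updates the call site's dispatch without deoptimizing.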
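Conceptually, an inlined monomorphic call becomes a type check guarding the inlined body, plus a fallback for when the guard fails. The hand-written Java below only illustrates that shape under simplifying assumptions: the real guard is emitted in machine code, and the hypothetical `deoptimize()` method merely stands in for the JVM's uncommon trap by counting failures and then doing an ordinary virtual call.

```java
public class GuardedInliningSketch {
    static class Animal { String speak() { return "Animal"; } }
    static class Dog extends Animal { @Override String speak() { return "Dog"; } }
    static class Cat extends Animal { @Override String speak() { return "Cat"; } }

    static int guardFailures = 0;

    // Roughly the shape of compiled code for `a.speak()` after the profile showed only Dog
    static String speakSpeculative(Animal a) {
        if (a.getClass() == Dog.class) {
            return "Dog";                 // inlined body of Dog.speak(): no call at all
        }
        return deoptimize(a);             // guard failed: leave the optimized path
    }

    // Hypothetical stand-in for an uncommon trap; the real JVM would discard the
    // compiled code, resume in the interpreter, and update the type profile
    static String deoptimize(Animal a) {
        guardFailures++;
        return a.speak();                 // ordinary virtual dispatch
    }

    public static void main(String[] args) {
        System.out.println(speakSpeculative(new Dog()));
        System.out.println(speakSpeculative(new Cat()));
        System.out.println("guard failures: " + guardFailures);
    }
}
```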

Example with Deoptimization

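```java
public class InlineCacheExample {
    static class Animal { String speak() { return "Animal"; } }

    static class Dog extends Animal { @Override String speak() { return "Dog"; } }

    static class Cat extends Animal { @Override String speak() { return "Cat"; } }

    // A single call site: every receiver passed in is dispatched by this one a.speak()
    static String callSpeak(Animal a) {
        return a.speak();
    }

    public static void main(String[] args) {
        Animal dog = new Dog();
        long total = 0;
        // speak() returns a String instead of printing so console I/O doesn't dominate the loop
        for (int i = 0; i < 1_000_000; i++) {
            total += callSpeak(dog).length(); // Only Dog seen so far: monomorphic, a candidate for inlining
        }
        // First Cat at the same call site: if the JIT inlined Dog.speak() behind a type guard,
        // the guard fails and the compiled code may be deoptimized
        System.out.println(callSpeak(new Cat()) + " " + total);
    }
}
```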

Monitoring Inline Caching

JVM Flags

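```shell
java -XX:+PrintCompilation InlineCacheExample
java -XX:+UnlockDiagnosticVMOptions -XX:+PrintInlining InlineCacheExample
```

-XX:+PrintCompilation is a standard flag; in its output, methods invalidated by deoptimization are marked "made not entrant". -XX:+PrintInlining is a diagnostic flag, so it must be preceded by -XX:+UnlockDiagnosticVMOptions.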

Tools

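- JFR + JDK Mission Control: Record and inspect JIT compilation, inlining, and deoptimization events.

- JITWatch: Visualize the HotSpot compilation log (produced with -XX:+UnlockDiagnosticVMOptions -XX:+LogCompilation), including inlining decisions.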

Real-World Case Studies

Case 1: REST API Framework

- Issue: Excessive virtual method calls in request pipeline.

- Solution: Inline caching plus JIT inlining of the hot methods.

- Result: 20% latency reduction.

Case 2: Financial Analytics Engine

- Issue: Reflection-heavy dynamic dispatch.

- Solution: Replaced with stable polymorphic call sites.

- Result: Reduced megamorphic overhead, improved throughput.
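Case 2's fix, replacing reflective dispatch with ordinary polymorphic calls, might look like the sketch below. Everything in it is hypothetical: the `PricingRule` interface, the `Discount` and `Tax` rules, and both helper methods are illustrative, not taken from the engine described above. The point is that `totalWithInterface` contains a regular interface call site with a small, stable set of receiver types that the JIT can profile and potentially inline, whereas `totalReflectively` looks up and invokes `apply` through `Method.invoke` on every call, which is typically much harder for the JIT to optimize.

```java
import java.lang.reflect.Method;

public class ReflectionVsInterface {
    // Hypothetical pricing rules standing in for the engine's dynamic dispatch targets
    public interface PricingRule { double apply(double price); }

    public static class Discount implements PricingRule {
        public double apply(double price) { return price * 0.9; }
    }

    public static class Tax implements PricingRule {
        public double apply(double price) { return price * 1.2; }
    }

    // Before: look up and invoke "apply" reflectively for each rule
    static double totalReflectively(Object[] rules, double price) throws Exception {
        for (Object rule : rules) {
            Method m = rule.getClass().getMethod("apply", double.class);
            price = (Double) m.invoke(rule, price);
        }
        return price;
    }

    // After: one ordinary interface call site with a small, stable set of receiver types
    static double totalWithInterface(PricingRule[] rules, double price) {
        for (PricingRule rule : rules) {
            price = rule.apply(price);
        }
        return price;
    }

    public static void main(String[] args) throws Exception {
        PricingRule[] rules = { new Discount(), new Tax() };
        System.out.println(totalReflectively(rules, 100.0));
        System.out.println(totalWithInterface(rules, 100.0));
    }
}
```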

Pitfalls and Troubleshooting

- Megamorphic Sites: Inline caching is ineffective when a call site sees too many types.

- Deoptimization Overhead: Frequent type changes trigger repeated deoptimization and recompilation.

- Dynamic Frameworks: Hibernate/Spring proxies often create polymorphic or megamorphic sites.

- Hard to Detect: Requires JFR or JITWatch for visibility.
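The proxy effect can be observed with the JDK's own `java.lang.reflect.Proxy` (frameworks such as Spring may use other proxying mechanisms as well). In this sketch the `Greeter` interface, `PlainGreeter`, `wrap`, and `callSite` are hypothetical names: one call site receives both a plain implementation and a proxy wrapping it, and because the proxy is an instance of a separately generated class, the call site sees two receiver classes instead of one.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.LinkedHashSet;
import java.util.Set;

public class ProxyReceiverTypes {
    // Hypothetical service interface
    public interface Greeter { String greet(String name); }

    static class PlainGreeter implements Greeter {
        public String greet(String name) { return "Hello, " + name; }
    }

    // Wraps a Greeter in a JDK dynamic proxy that simply forwards every call
    static Greeter wrap(Greeter target) {
        InvocationHandler forward = (proxy, method, args) -> method.invoke(target, args);
        return (Greeter) Proxy.newProxyInstance(
                Greeter.class.getClassLoader(), new Class<?>[] { Greeter.class }, forward);
    }

    // Records which receiver classes reach the single greet() call site below
    static final Set<Class<?>> receiverTypes = new LinkedHashSet<>();

    static String callSite(Greeter g) {
        receiverTypes.add(g.getClass());
        return g.greet("JVM");
    }

    public static void main(String[] args) {
        Greeter plain = new PlainGreeter();
        callSite(plain);
        callSite(wrap(plain));
        for (Class<?> c : receiverTypes) {
            System.out.println(c.getName()); // the plain class, then a generated proxy class
        }
    }
}
```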

Best Practices

- Favor monomorphic call sites where possible.

- Avoid excessive reflection/dynamic proxies in hot paths.

- Use final or sealed classes to stabilize call sites.

- Profile with JFR/JITWatch before tuning.

- Benchmark before disabling inlining or JIT optimizations.
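One way to apply the first and third practices is sketched below with hypothetical `Circle` and `Square` shapes. Declaring the concrete classes final means a call through a `Circle`-typed reference has exactly one possible target, and processing each type in its own loop keeps each loop's call site monomorphic. On Java 17 and later, a sealed interface could additionally restrict which classes may implement `Shape`; this sketch sticks to final classes so it also compiles on older JDKs.

```java
import java.util.ArrayList;
import java.util.List;

public class MonomorphicLoops {
    interface Shape { double area(); }

    // final: no subclass can override area(), so a Circle-typed call has one target
    static final class Circle implements Shape {
        final double r;
        Circle(double r) { this.r = r; }
        public double area() { return Math.PI * r * r; }
    }

    static final class Square implements Shape {
        final double side;
        Square(double side) { this.side = side; }
        public double area() { return side * side; }
    }

    // Mixed: this single s.area() call site sees every Shape implementation
    static double totalMixed(List<Shape> shapes) {
        double sum = 0;
        for (Shape s : shapes) sum += s.area();
        return sum;
    }

    // Split by type: each loop's call site only ever sees one final class
    static double totalSplit(List<Circle> circles, List<Square> squares) {
        double sum = 0;
        for (Circle c : circles) sum += c.area();
        for (Square q : squares) sum += q.area();
        return sum;
    }

    public static void main(String[] args) {
        List<Circle> circles = new ArrayList<>();
        List<Square> squares = new ArrayList<>();
        for (int i = 1; i <= 3; i++) {
            circles.add(new Circle(i));
            squares.add(new Square(i));
        }
        List<Shape> mixed = new ArrayList<>(circles);
        mixed.addAll(squares);
        System.out.println(totalMixed(mixed) + " " + totalSplit(circles, squares));
    }
}
```

Both methods compute the same total; the difference is only in how many receiver types each call site observes.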

JVM Version Tracker

- Java 8: Mature inline caching and C2 optimizations.

- Java 11: G1 GC default, Graal JIT experimental.

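- Java 17: The experimental Graal JIT was removed from OpenJDK builds (JEP 410); Graal remains available through GraalVM.

- Java 24+: Project Lilliput's compact object headers arrive as an experimental feature (JEP 450), mainly reducing memory footprint.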

Conclusion & Key Takeaways

- Inline caching accelerates method dispatch by caching type-to-method mappings.

- Monomorphic call sites are the most optimized, polymorphic sites with a few types are handled efficiently, and megamorphic sites degrade performance.

- Critical for JIT inlining and overall JVM performance.

- Developers should profile and design class hierarchies to maximize IC effectiveness.

FAQ

1. What is the JVM memory model and why does it matter?

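The Java Memory Model (JMM) defines when a write made by one thread is guaranteed to be visible to another, and therefore which reorderings the compiler and CPU may perform. JIT optimizations built on inline caching, such as inlining, must preserve those guarantees.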

2. How does G1 GC differ from CMS?

G1 splits the heap into regions and compacts them incrementally; CMS did not compact the old generation, so it could fragment. CMS was removed in JDK 14.

3. When should I use ZGC or Shenandoah?

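For latency-sensitive workloads, especially with large heaps, where GC pauses must stay very short; both collectors do most of their work concurrently with application threads.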

4. What are JVM safepoints and why do they matter?

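A safepoint is a state in which Java threads are paused at known points so the JVM can safely perform operations such as some GC phases, deoptimization, and code-cache cleanup (including inline caches). Slow time-to-safepoint adds directly to pause times.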

5. How do I solve OutOfMemoryError in production?

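Read the error message to see which area is exhausted (for example, Java heap space or Metaspace), capture a heap dump (for example with -XX:+HeapDumpOnOutOfMemoryError), and check GC logs for live data that keeps growing after each collection.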

6. What are the trade-offs of throughput vs latency tuning?

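Throughput tuning maximizes total work done and tolerates longer GC pauses; latency tuning keeps pauses short and predictable at some cost in CPU and throughput. Keeping hot call sites monomorphic helps in both cases.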

7. How do I read and interpret GC logs?

Enable them with -Xlog:gc* (JDK 9 and later), then look at allocation pressure, pause times, and safepoint interactions.

8. How does JIT compilation optimize performance?

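It compiles hot methods to native code and uses runtime profiles, including the receiver types observed at call sites, to inline methods and apply speculative optimizations that are undone by deoptimization if they turn out wrong.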

9. What’s the future of GC in Java (Project Lilliput)?

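Project Lilliput shrinks object headers (compact object headers, experimental in JDK 24), which reduces heap footprint and can improve data locality. It changes object layout rather than adding a new collector.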

10. How does GC differ in microservices vs monoliths?

Microservices require predictable latency; monoliths emphasize throughput.