High-throughput web applications such as e-commerce platforms, streaming services, and social networks serve millions of requests per second. While latency matters, the primary goal is throughput: processing as many requests as possible with the resources available.

In such environments, Garbage Collection (GC) tuning plays a critical role. A poorly tuned GC can reduce requests-per-second capacity, increase CPU load, and cause unpredictable response times. This case study explores real-world GC tuning strategies for high-throughput applications.

JVM and GC in High-Throughput Systems

- JVM Advantage: Managed memory and portability.

- Challenge: GC must handle large allocation rates without harming throughput.

- Trade-Off: Minor pauses are acceptable, but long pauses reduce efficiency.

Analogy: Think of a busy restaurant, where throughput means serving as many customers as possible. GC is like cleaning tables. If cleaning is slow, customers wait longer for a seat and the restaurant serves fewer of them per hour.
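
To put a number on the analogy, one common framing is the fraction of elapsed time the JVM spends outside GC. The sketch below reads the standard `java.lang.management` beans; the class name `GcOverhead` and its methods are hypothetical. Treat the result as a rough indicator: for concurrent collectors, the reported collection time may include work that ran alongside the application rather than pause time only.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: estimates how much of the JVM's uptime was not spent in GC.
class GcOverhead {
    /** Fraction of elapsed time not attributed to GC; 1.0 when no time has elapsed. */
    static double throughputFraction(long gcMillis, long uptimeMillis) {
        if (uptimeMillis <= 0) return 1.0;
        // Clamp so that odd readings never push the result outside [0, 1].
        double gc = Math.min(Math.max(gcMillis, 0), uptimeMillis);
        return 1.0 - gc / uptimeMillis;
    }

    /** Sums the accumulated collection time of every collector registered in this JVM. */
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean bean : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = bean.getCollectionTime(); // -1 when the collector does not report it
            if (t > 0) total += t;
        }
        return total;
    }

    public static void main(String[] args) {
        long uptime = ManagementFactory.getRuntimeMXBean().getUptime();
        long gc = totalGcMillis();
        System.out.printf("GC time: %d ms, throughput fraction: %.4f%n",
                gc, throughputFraction(gc, uptime));
    }
}
```

Sampling this periodically under load, rather than once at startup, gives a more useful trend.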

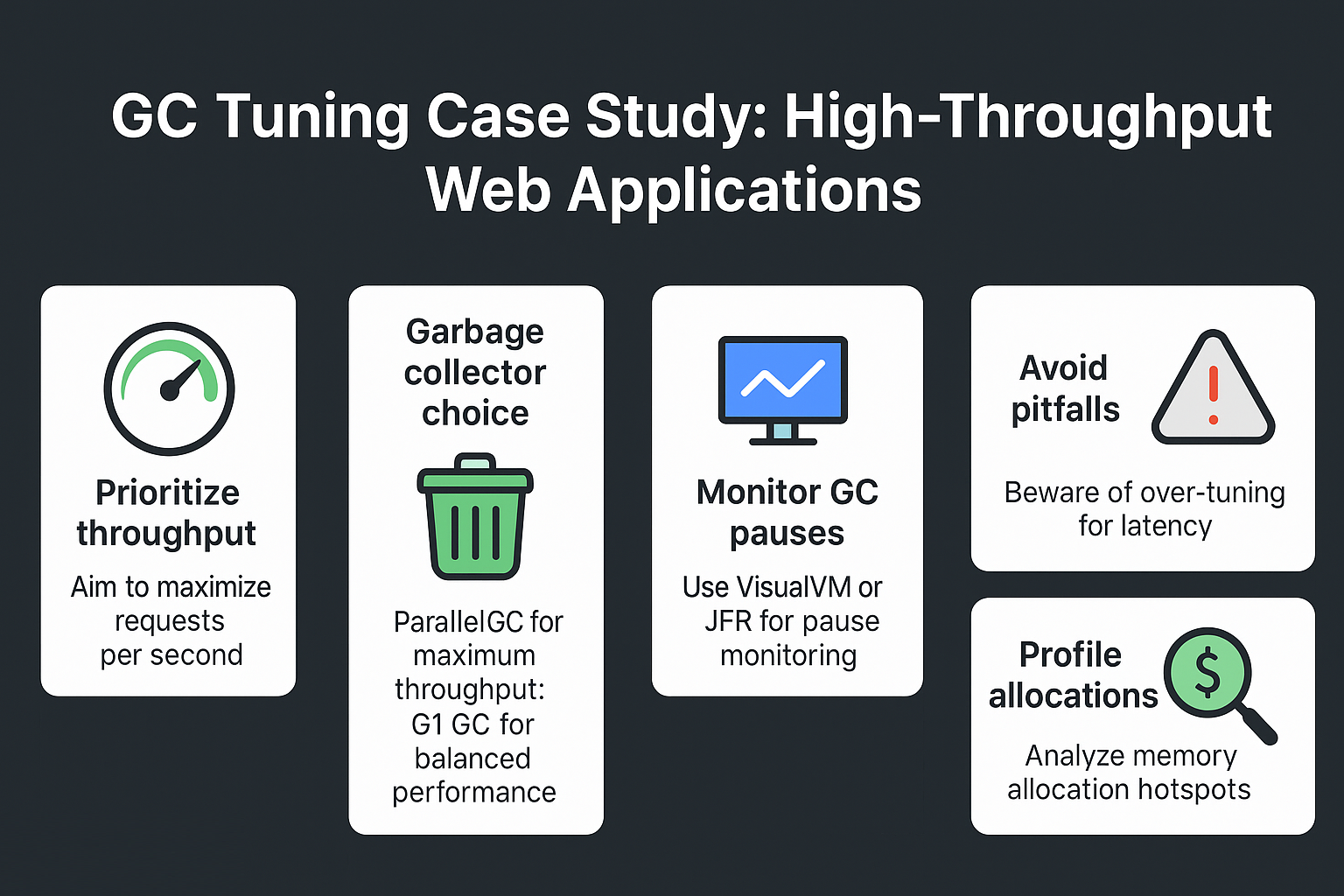

Garbage Collector Choices for Throughput

Parallel GC

- Strengths: Maximizes throughput with multi-threaded collection.

- Weaknesses: Stop-the-world pauses may be long.

- Use Case: Batch jobs, request-heavy apps tolerant to pauses.

- Enable with:

-XX:+UseParallelGC

G1 GC (Default since Java 9)

- Strengths: Region-based, with a configurable pause-time target for more predictable pauses.

- Weaknesses: More CPU overhead than Parallel.

- Use Case: Balanced workloads needing throughput + latency predictability.

- Enable with:

-XX:+UseG1GC

ZGC / Shenandoah

- Strengths: Ultra-low latency, large heap support.

- Weaknesses: Extra CPU cost, not always required for throughput-first apps.

- Use Case: High throughput with strict SLAs on latency consistency.

- Enable with:

-XX:+UseZGC (or -XX:+UseShenandoahGC for Shenandoah, on JDK builds that include it)
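
After setting one of these flags, it is worth confirming which collector the JVM actually started with. A minimal sketch, assuming the bean-name prefixes that HotSpot commonly reports (for example "G1 Young Generation" or "PS Scavenge"); these names are not a documented contract and may differ between JDK versions, and the class `ActiveCollector` is hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: maps GC bean names to a collector family.
class ActiveCollector {
    /** Assumption: HotSpot bean names start with these prefixes; anything else is "Other". */
    static String family(String beanName) {
        if (beanName.startsWith("G1")) return "G1";
        if (beanName.startsWith("PS ")) return "Parallel";
        if (beanName.startsWith("ZGC")) return "ZGC";
        if (beanName.startsWith("Shenandoah")) return "Shenandoah";
        return "Other";
    }

    public static void main(String[] args) {
        // Prints one line per registered collector bean in the running JVM.
        for (GarbageCollectorMXBean bean : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.println(bean.getName() + " -> " + family(bean.getName()));
        }
    }
}
```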

Case Study: High-Throughput Web Platform

System Requirements

- SLA: 10k requests per second.

- Heap: 16 GB.

- Traffic: Burst-heavy (flash sales, promotions).

Initial Setup

- JVM Default: G1 GC.

- Observed: Throughput capped at 8k req/s.

Step 1: Parallel GC for Throughput

-XX:+UseParallelGC -Xms16g -Xmx16g

- Result: Increased throughput to 11k req/s.

- Trade-Off: Pauses reached 300ms during bursts.
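
To see why a 300ms pause matters at this request rate, a back-of-the-envelope estimate helps. The sketch assumes requests arrive at a constant rate while application threads are stopped, which real bursts will not match exactly; `PauseBacklog` is a hypothetical name.

```java
// Hypothetical helper: estimates how many requests pile up during one stop-the-world pause.
class PauseBacklog {
    /** Requests arriving during a pause, assuming a constant arrival rate. */
    static long arrivalsDuringPause(long requestsPerSecond, long pauseMillis) {
        return requestsPerSecond * pauseMillis / 1000;
    }

    public static void main(String[] args) {
        // Step 1 figures: 11k req/s with pauses of 300ms.
        System.out.println(arrivalsDuringPause(11_000, 300)); // prints 3300
    }
}
```

Under that assumption, roughly 3,300 requests would be waiting when the pause ends, and they must be absorbed by queues and thread pools on top of the normal load.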

Step 2: Optimize with G1 GC

-XX:+UseG1GC -Xms16g -Xmx16g -XX:MaxGCPauseMillis=200

- Result: Throughput ~10.5k req/s, pauses <200ms.

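- Balanced throughput and latency. Note that 200 ms is already G1's default pause target, so the gain over the initial setup likely comes from fixing the heap at 16 GB rather than from the pause flag.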

Step 3: Testing with ZGC

-XX:+UseZGC -Xms16g -Xmx16g

- Result: Stable latency <10ms.

- Trade-Off: CPU overhead increased 15%.
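
The choice between the measured configurations can be expressed as a small filter: keep the runs that meet the throughput SLA and the pause budget, then take the fastest. This is a sketch, not the method the team used. It needs Java 16+ for records, the names are hypothetical, the G1 pause is entered as its 200ms upper bound, and ZGC is left out because no throughput figure is reported for it.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical helper: picks the highest-throughput run that satisfies both constraints.
class CollectorChoice {
    record Result(String collector, double reqPerSec, double maxPauseMillis) {}

    // Figures from Steps 1 and 2 of the case study.
    static final List<Result> CASE_STUDY = List.of(
            new Result("Parallel", 11_000, 300),
            new Result("G1", 10_500, 200));

    static Optional<Result> best(List<Result> results, double minReqPerSec, double pauseBudgetMillis) {
        return results.stream()
                .filter(r -> r.reqPerSec() >= minReqPerSec)
                .filter(r -> r.maxPauseMillis() <= pauseBudgetMillis)
                .max(Comparator.comparingDouble(Result::reqPerSec));
    }

    public static void main(String[] args) {
        // With a 10k req/s SLA and a 200ms pause budget, only the G1 run qualifies.
        System.out.println(best(CASE_STUDY, 10_000, 200).map(Result::collector).orElse("none"));
    }
}
```

Loosening the pause budget to 300ms would select the Parallel run instead, which is the trade-off the steps above illustrate.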

Monitoring and Tools

- VisualVM and Java Flight Recorder (JFR): GC pause monitoring.

- JDK Mission Control: Analyzes JFR recordings to correlate allocation with request activity.

- Async Profiler: Detect allocation hotspots.
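
Whichever tool collects the pause durations, averages tend to hide the spikes that matter, so percentiles are usually more informative. Below is a minimal nearest-rank percentile sketch; the class name and the sample values in `main` are illustrative and are not measurements from the case study.

```java
import java.util.Arrays;

// Hypothetical helper: nearest-rank percentile over a set of pause durations.
class PausePercentile {
    /** Returns the smallest sample such that at least p percent of samples are <= it. */
    static double percentile(double[] samplesMillis, double p) {
        if (samplesMillis.length == 0) throw new IllegalArgumentException("no samples");
        if (p <= 0 || p > 100) throw new IllegalArgumentException("p must be in (0, 100]");
        double[] sorted = samplesMillis.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[rank - 1];
    }

    public static void main(String[] args) {
        double[] pauses = {12, 8, 250, 15, 9, 11, 10, 14, 13, 7}; // illustrative values, in ms
        System.out.println("p50 = " + percentile(pauses, 50));
        System.out.println("p99 = " + percentile(pauses, 99));
    }
}
```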

Pitfalls in High-Throughput GC Tuning

- Over-Tuning for Latency: Reduces throughput.

- Ignoring Allocation Pressure: Object churn bottlenecks GC.

- Framework Effects: ORM frameworks can generate unnecessary garbage.

- Failure to Load-Test: GC must be tuned under realistic production load.
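
Object churn often comes from small, easy-to-miss patterns. One well-known example is accumulating into a boxed type, sketched below with hypothetical method names. Whether each iteration really allocates depends on the JIT (escape analysis can remove some allocations), so treat this as an illustration to verify with a profiler rather than a guaranteed cost.

```java
// Hypothetical example: two ways to compute the same sum with different allocation behavior.
class AllocationChurn {
    /** Boxed accumulator: each += unboxes, adds, and may allocate a new Long. */
    static long sumBoxed(int n) {
        Long total = 0L;
        for (long i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    /** Primitive accumulator: same result with no per-iteration objects. */
    static long sumPrimitive(int n) {
        long total = 0;
        for (long i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sumBoxed(1000) == sumPrimitive(1000)); // prints true
    }
}
```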

Best Practices for High-Throughput GC

- Start with G1 GC (default).

- Use Parallel GC for maximum throughput, if pauses are tolerable.

- Test ZGC/Shenandoah if latency SLAs are strict.

- Always benchmark under peak load.

- Profile allocation-heavy code paths.
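
For a quick check of how much a specific code path allocates, HotSpot exposes per-thread allocation counters through `com.sun.management.ThreadMXBean`. The sketch below assumes a HotSpot-based JVM and returns -1 where the feature is unavailable; `AllocationProbe` and `sink` are hypothetical names. It complements a profiler such as Async Profiler rather than replacing it.

```java
import java.lang.management.ManagementFactory;

// Hypothetical helper: measures bytes allocated by the current thread while a task runs.
class AllocationProbe {
    // Publishing results here keeps allocations reachable so the JIT is less likely to elide them.
    static volatile Object sink;

    /** Returns bytes allocated by the current thread during the task, or -1 if unsupported. */
    static long allocatedBytes(Runnable task) {
        java.lang.management.ThreadMXBean base = ManagementFactory.getThreadMXBean();
        if (!(base instanceof com.sun.management.ThreadMXBean)) return -1;
        com.sun.management.ThreadMXBean bean = (com.sun.management.ThreadMXBean) base;
        if (!bean.isThreadAllocatedMemorySupported() || !bean.isThreadAllocatedMemoryEnabled()) {
            return -1;
        }
        long id = Thread.currentThread().getId();
        long before = bean.getThreadAllocatedBytes(id);
        task.run();
        return bean.getThreadAllocatedBytes(id) - before;
    }

    public static void main(String[] args) {
        long bytes = allocatedBytes(() -> sink = new byte[1_000_000]);
        System.out.println("Allocated bytes: " + bytes);
    }
}
```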

JVM Version Tracker

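- Java 8: Parallel GC is the default; CMS for latency-sensitive apps.

- Java 11: G1 GC default (since Java 9); ZGC available as an experimental option.

- Java 17: ZGC and Shenandoah production-ready (both since Java 15); CMS removed (Java 14).

- Java 21+: Generational ZGC (Java 21); Project Lilliput's compact object headers arrive as an experimental feature in Java 24.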

Conclusion & Key Takeaways

- High-throughput apps prioritize request-per-second efficiency.

- Parallel GC maximizes throughput but causes long pauses.

- G1 GC balances throughput and latency.

- ZGC ensures stable latency at higher CPU cost.

- Always profile and benchmark with production traffic.

FAQ

1. What is the JVM memory model and why does it matter?

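The Java Memory Model defines when writes made by one thread become visible to others. It matters because it keeps correctly synchronized code correct no matter how the JIT and GC optimize underneath.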

2. How does G1 GC differ from CMS?

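G1 divides the heap into regions and compacts them as it collects; CMS did not compact, so the old generation could fragment. CMS was removed in Java 14.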

3. When should I use ZGC or Shenandoah?

When predictable latency is as important as throughput.

4. What are JVM safepoints and why do they matter?

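A safepoint is a point at which all application threads are stopped in a known state. Stop-the-world GC phases run at safepoints, so the time threads take to reach one adds to the observed pause.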

5. How do I solve OutOfMemoryError in production?

Analyze GC logs, tune heap size, and reduce allocations.

6. What are the trade-offs of throughput vs latency tuning?

Throughput tuning maximizes the work done per unit of CPU; latency tuning reduces pause spikes, usually at some cost in throughput.

7. How do I read and interpret GC logs?

Check pause duration, heap before/after GC, and frequency.
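
As an illustration of those three fields, the sketch below extracts them from a pause line in the unified logging format produced by `-Xlog:gc` on Java 9+. The regular expression assumes sizes reported in `M` and the usual `GC(n) Pause ...` layout, which is not a stable contract across JDK versions. The sample line is made up for illustration, and `GcLogLine` is a hypothetical name (the record requires Java 16+).

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: parses one pause line from a unified GC log.
class GcLogLine {
    record GcPause(int id, String kind, long beforeMb, long afterMb, long capacityMb, double pauseMillis) {}

    // Assumed layout: GC(<id>) Pause <description> <before>M-><after>M(<capacity>M) <time>ms
    private static final Pattern PAUSE = Pattern.compile(
            "GC\\((\\d+)\\) (Pause .+?) (\\d+)M->(\\d+)M\\((\\d+)M\\) (\\d+(?:\\.\\d+)?)ms");

    static Optional<GcPause> parse(String line) {
        Matcher m = PAUSE.matcher(line);
        if (!m.find()) return Optional.empty();
        return Optional.of(new GcPause(
                Integer.parseInt(m.group(1)), m.group(2),
                Long.parseLong(m.group(3)), Long.parseLong(m.group(4)),
                Long.parseLong(m.group(5)), Double.parseDouble(m.group(6))));
    }

    public static void main(String[] args) {
        String sample = "[2.345s][info][gc] GC(7) Pause Young (Normal) (G1 Evacuation Pause) "
                + "512M->128M(16384M) 18.250ms";
        parse(sample).ifPresent(p -> System.out.println(
                p.kind() + ": freed " + (p.beforeMb() - p.afterMb()) + "M in " + p.pauseMillis() + " ms"));
    }
}
```

Counting parsed lines per time window gives the frequency, and feeding `pauseMillis` into a percentile calculation gives the pause distribution.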

8. How does JIT compilation optimize performance?

By inlining hot methods, enabling faster execution paths.

9. What’s the future of GC in Java (Project Lilliput)?

Smaller object headers improve heap density and GC speed.

10. How does GC differ in microservices vs monoliths?

Microservices typically run many small heaps and often prioritize predictable latency; monoliths typically run fewer, larger heaps and are more often tuned for throughput.