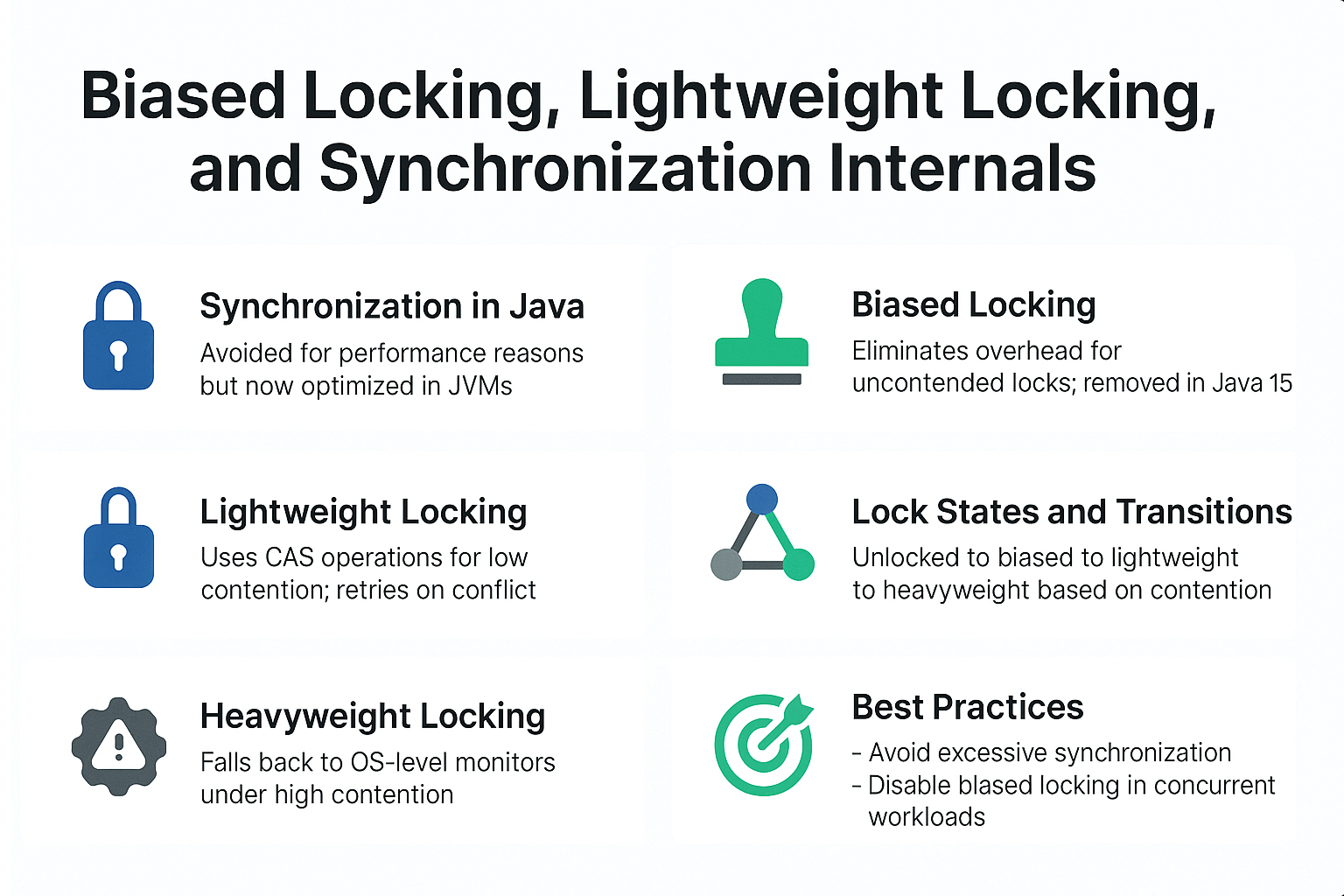

Synchronization in Java has long been considered expensive, leading developers to avoid synchronized blocks in performance-critical code. However, modern JVMs employ sophisticated locking mechanisms—including biased locking, lightweight locking, and heavyweight (OS) locking—to minimize synchronization costs.

The HotSpot JVM dynamically adapts locking strategies based on runtime conditions, ensuring low-latency synchronization for uncontended locks while maintaining correctness under heavy contention. This tutorial explains how synchronization works in the JVM, the role of biased and lightweight locks, and how to tune synchronization for real-world applications.

Synchronization in JVM Internals

Object Header and Mark Word

Every Java object contains a header, which includes:

- Mark Word: Stores lock state, hashcode, and GC metadata.

- Class Pointer: Points to class metadata.

The Mark Word is central to synchronization, as it encodes lock states and transitions.
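To make the encoding concrete, here is a minimal sketch that decodes the lock tag from the low bits of a mark word value. It assumes the classic 64-bit HotSpot layout (before compact object headers), and the sample values are hand-written constants, not headers read from live objects. The class and method names are hypothetical.

```java
public class MarkWordSketch {

    /** Decodes the lock state from the low three bits of a (hand-made) mark word value. */
    static String lockState(long markWord) {
        long lockBits = markWord & 0b11;               // lowest two bits: lock tag
        boolean biasedBit = (markWord & 0b100) != 0;   // third bit: biased-locking flag
        if (lockBits == 0b01) {
            return biasedBit ? "biased" : "unlocked";
        }
        if (lockBits == 0b00) {
            return "lightweight-locked";
        }
        if (lockBits == 0b10) {
            return "heavyweight (inflated monitor)";
        }
        return "marked for GC";                        // remaining tag: 0b11
    }

    public static void main(String[] args) {
        // Illustrative values only: just the low three bits are set.
        long[] samples = {0b001, 0b101, 0b000, 0b010, 0b011};
        for (long sample : samples) {
            System.out.println(Long.toBinaryString(sample) + " -> " + lockState(sample));
        }
    }
}
```

Inspecting real object headers requires a tool such as OpenJDK's JOL; the sketch only shows how a few bits are enough to distinguish the lock states.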

Types of Locks in JVM

1. Biased Locking

- Goal: Eliminate synchronization overhead for uncontended locks.

- Mechanism: The first thread to acquire the lock has its thread ID recorded in the Mark Word, biasing the lock toward it. Later acquisitions by the same thread need only a cheap ownership check, with no atomic instruction.

- When Used: Single-threaded or mostly uncontended scenarios.

- Cost: Revoking bias requires a safepoint, adding overhead.

Flag:

-XX:+UseBiasedLocking

(Enabled by default in Java 8; disabled by default and deprecated in Java 15 by JEP 374; the flag is obsolete from Java 18.)

2. Lightweight Locking (Thin Locks)

- Goal: Fast synchronization under low contention.

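- Mechanism: Uses a CAS (Compare-And-Swap) on the Mark Word to record the owning thread's lock record, instead of an OS-level monitor.

- When Used: When different threads take the same lock but rarely at the same moment.

- Cost: An atomic CAS on each acquisition; a CAS that fails because another thread holds the lock leads to inflation into a heavyweight monitor.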
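The CAS idea can be illustrated in plain Java. The sketch below is only an analogy: it uses an AtomicReference where HotSpot uses the Mark Word, it is not reentrant, and the class name is hypothetical. It shows that a single compare-and-set succeeds when the lock is free and fails, without blocking, when it is held.

```java
import java.util.concurrent.atomic.AtomicReference;

public class CasLockSketch {
    // null means unlocked; otherwise this holds the owning thread.
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    /** One CAS attempt: succeeds only if no thread currently owns the lock. */
    boolean tryAcquire() {
        return owner.compareAndSet(null, Thread.currentThread());
    }

    /** Releases the lock if the calling thread owns it. */
    boolean release() {
        return owner.compareAndSet(Thread.currentThread(), null);
    }

    public static void main(String[] args) throws InterruptedException {
        CasLockSketch lock = new CasLockSketch();
        System.out.println("main acquires: " + lock.tryAcquire());            // true
        Thread other = new Thread(() ->
                System.out.println("other acquires while held: " + lock.tryAcquire())); // false
        other.start();
        other.join();
        System.out.println("main releases: " + lock.release());               // true
    }
}
```

What a caller does after a failed attempt (retry, spin, or block) is exactly the policy decision the JVM makes when it escalates a lock.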

3. Heavyweight Locking (Monitors)

- Goal: Handle high contention safely.

- Mechanism: Inflates the lock to a full monitor backed by OS synchronization primitives. Waiting threads block at the OS level until the owner releases the lock.

- When Used: High contention across threads.

- Cost: High latency due to kernel involvement.
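Java code cannot directly observe whether a monitor has been inflated, but it can observe the blocking. The following sketch (hypothetical class name) holds a monitor in one thread and reports the state of a second thread that is waiting to enter it.

```java
import java.util.concurrent.CountDownLatch;

public class BlockedThreadDemo {
    private static final Object LOCK = new Object();

    /** Returns the state of a thread waiting to enter a monitor that another thread holds. */
    static Thread.State observeWaiterState() {
        CountDownLatch held = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);

        Thread holder = new Thread(() -> {
            synchronized (LOCK) {
                held.countDown();        // signal that the monitor is taken
                awaitQuietly(release);   // keep holding it until told to let go
            }
        });
        Thread waiter = new Thread(() -> {
            synchronized (LOCK) {
                // Entered only after the holder has left the monitor.
            }
        });

        holder.start();
        awaitQuietly(held);
        waiter.start();
        try {
            // Poll until the waiter is blocked on the contended monitor.
            while (waiter.getState() != Thread.State.BLOCKED) {
                Thread.sleep(1);
            }
            Thread.State observed = waiter.getState();
            release.countDown();
            holder.join();
            waiter.join();
            return observed;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("waiter state: " + observeWaiterState()); // prints "waiter state: BLOCKED"
    }
}
```

Threads that show up as BLOCKED in thread dumps are the visible symptom of this kind of contention.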

Lock States and Transitions

- Unlocked → Biased Lock: First thread acquires lock, bias stored in Mark Word.

- Biased Lock → Lightweight Lock: Another thread attempts lock → bias revoked → CAS used.

- Lightweight Lock → Heavyweight Lock: CAS fails due to contention → OS monitor engaged.
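The escalation path can be written down as a small state machine. This is a simplified model of the transitions listed above, not HotSpot's implementation; the enum and event names are invented for illustration, and details such as deflation or bulk rebiasing are left out.

```java
public class LockStateMachine {

    enum LockState { UNLOCKED, BIASED, LIGHTWEIGHT, HEAVYWEIGHT }

    enum Event { FIRST_ACQUIRE, OTHER_THREAD_ACQUIRES, CAS_FAILS }

    /** Applies the escalation rules; events that do not apply leave the state unchanged. */
    static LockState next(LockState state, Event event) {
        switch (state) {
            case UNLOCKED:
                return event == Event.FIRST_ACQUIRE ? LockState.BIASED : state;
            case BIASED:
                return event == Event.OTHER_THREAD_ACQUIRES ? LockState.LIGHTWEIGHT : state;
            case LIGHTWEIGHT:
                return event == Event.CAS_FAILS ? LockState.HEAVYWEIGHT : state;
            default:
                return state; // HEAVYWEIGHT stays heavyweight in this simplified model
        }
    }

    public static void main(String[] args) {
        LockState state = LockState.UNLOCKED;
        Event[] events = {Event.FIRST_ACQUIRE, Event.OTHER_THREAD_ACQUIRES, Event.CAS_FAILS};
        for (Event event : events) {
            LockState before = state;
            state = next(state, event);
            System.out.println(before + " --" + event + "--> " + state);
        }
    }
}
```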

Analogy:

- Biased Lock: Key permanently given to one user.

- Lightweight Lock: Key on the table, first to grab gets it.

- Heavyweight Lock: Security guard hands out key, causing delays.

Example: Synchronization in Action

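    public class SyncExample {

        // Shared state guarded by this object's monitor.
        private int counter = 0;

        // A synchronized instance method locks on "this" for the duration of the call.
        public synchronized void increment() {
            counter++;
        }

        public static void main(String[] args) {
            SyncExample obj = new SyncExample();
            // Only the main thread ever takes the lock, so it is never contended.
            for (int i = 0; i < 1_000_000; i++) {
                obj.increment();
            }
        }
    }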

- If run on a single thread, as here, on a JVM with biased locking enabled → biased locking.

- If several threads call increment() but rarely at the same moment → lightweight locking.

- If threads frequently block on each other → OS monitor.
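To exercise the contended paths, the same counter can be driven from several threads. The sketch below is a hypothetical multi-threaded variant (class name, thread count, and iteration count are arbitrary choices); which lock state the JVM actually uses at any moment is not visible from Java code, but the result shows that synchronized keeps the count correct.

```java
public class ContendedCounter {
    private int counter = 0;

    public synchronized void increment() {
        counter++;
    }

    public synchronized int get() {
        return counter;
    }

    /** Runs the given number of threads, each incrementing a shared counter perThread times. */
    static int run(int threads, int perThread) {
        ContendedCounter shared = new ContendedCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    shared.increment();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return shared.get();
    }

    public static void main(String[] args) {
        System.out.println(run(4, 250_000)); // prints 1000000
    }
}
```

Removing the synchronized keyword from increment() would make the final count unpredictable, which is the correctness guarantee every lock state has to preserve.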

Monitoring Locking Behavior

JFR + Mission Control

- Track lock contention.

- Identify hotspots where synchronization causes latency.
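Contention events can also be captured programmatically with the JFR API, as in the following sketch. It assumes JDK 11 or later (where the jdk.jfr module is available) and lowers the threshold of the jdk.JavaMonitorEnter event to 10 ms; the class name, the 200 ms hold time, and the temporary file are illustrative choices.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordingFile;

public class MonitorEnterRecording {
    private static final Object LOCK = new Object();

    /** Makes the calling thread wait roughly 200 ms for a monitor held by another thread. */
    static void contendOnce() throws InterruptedException {
        CountDownLatch held = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            synchronized (LOCK) {
                held.countDown();
                try {
                    Thread.sleep(200);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }
        });
        holder.start();
        held.await();
        synchronized (LOCK) {
            // The caller blocks here until the holder's sleep ends.
        }
        holder.join();
    }

    /** Records one contended acquisition and counts the jdk.JavaMonitorEnter events captured. */
    static long countMonitorEnterEvents() {
        try {
            Path file = Files.createTempFile("locks", ".jfr");
            try (Recording recording = new Recording()) {
                recording.enable("jdk.JavaMonitorEnter").withThreshold(Duration.ofMillis(10));
                recording.start();
                contendOnce();
                recording.stop();
                recording.dump(file);
            }
            List<RecordedEvent> events = RecordingFile.readAllEvents(file);
            Files.deleteIfExists(file);
            return events.stream()
                    .filter(e -> e.getEventType().getName().equals("jdk.JavaMonitorEnter"))
                    .count();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("contended monitor enters recorded: " + countMonitorEnterEvents());
    }
}
```

The same events appear in Mission Control when a recording file is opened there, so this is mainly useful for automated checks.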

JVM Flags

-XX:+PrintSafepointStatistics (Java 8; on Java 9 and later, use -Xlog:safepoint)

-XX:+PrintConcurrentLocks (adds java.util.concurrent lock owners to thread dumps)

Real-World Case Studies

Case 1: Web Application

- Issue: High CPU due to lock contention.

- Diagnosis: Lightweight locks spinning under load.

- Solution: Replaced synchronized with ReentrantLock.

- Result: Reduced CPU, improved throughput.
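The case study does not show the code involved, so the following is only a sketch of what such a replacement commonly looks like. The class and method names are hypothetical. Besides the direct lock()/unlock() equivalent of a synchronized block, it uses tryLock() to show one capability synchronized lacks: backing off instead of waiting.

```java
import java.util.concurrent.locks.ReentrantLock;

public class ReentrantLockCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private long count = 0;

    /** Blocking increment: lock()/unlock() in try/finally replaces a synchronized block. */
    public void increment() {
        lock.lock();
        try {
            count++;
        } finally {
            lock.unlock();
        }
    }

    /** Non-blocking increment: returns false immediately if another thread holds the lock. */
    public boolean tryIncrement() {
        if (!lock.tryLock()) {
            return false;
        }
        try {
            count++;
            return true;
        } finally {
            lock.unlock();
        }
    }

    public long get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        ReentrantLockCounter counter = new ReentrantLockCounter();
        counter.increment();
        System.out.println("tryIncrement succeeded: " + counter.tryIncrement()); // true, lock is free
        System.out.println("count: " + counter.get());                          // 2
    }
}
```

Whether this helps depends on the workload, so a change like this should be measured against the synchronized version under realistic load.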

Case 2: Low-Latency Trading App

- Issue: Biased lock revocations causing safepoint pauses.

- Solution: Disabled biased locking (-XX:-UseBiasedLocking).

- Result: More consistent latency.

Pitfalls and Troubleshooting

- Biased Locking Overhead: Revocation at safepoint causes latency spikes.

- Spinning in CAS: Lightweight locks consume CPU under contention.

- OS Locking: Severe contention leads to kernel overhead.

- Monitoring Required: Without profiling, hard to know which lock state dominates.

Best Practices

- Avoid excessive synchronization on shared resources.

- Consider java.util.concurrent classes for better scalability.

- Disable biased locking in highly concurrent workloads (only relevant before Java 15, where it is still enabled by default).

- Profile locking behavior before tuning.

- Minimize lock scope to reduce contention.
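As one illustration of the java.util.concurrent recommendation, a contended counter can use LongAdder instead of a synchronized method. This is a sketch with a hypothetical class name and arbitrary thread counts; LongAdder spreads updates across internal cells so that threads rarely compete for the same memory location.

```java
import java.util.concurrent.atomic.LongAdder;

public class LongAdderCounter {

    /** Runs the given number of threads, each adding to a shared LongAdder perThread times. */
    static long run(int threads, int perThread) {
        LongAdder hits = new LongAdder();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    hits.increment();   // no monitor is acquired here
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return hits.sum();              // exact once all writers have finished
    }

    public static void main(String[] args) {
        System.out.println(run(4, 250_000)); // prints 1000000
    }
}
```

LongAdder suits statistics-style counters that are written often and read rarely; when a value must be read and updated atomically together, AtomicLong or an explicit lock is the better fit.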

JVM Version Tracker

- Java 8: Biased locking default.

- Java 11: G1 GC is the default collector (since Java 9); biased locking still enabled by default.

- Java 15: Biased locking disabled by default and deprecated (JEP 374).

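- Java 17+: Continued refinements in lightweight locking; the biased locking flags are obsolete from Java 18.

- Java 21+: Project Lilliput shrinks object headers, which changes how lock metadata is stored; compact object headers arrive as an experimental option in Java 24 (JEP 450).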

Conclusion & Key Takeaways

- Biased Locking: Optimized for locks used by a single thread; disabled by default since Java 15.

- Lightweight Locking: Efficient under low contention.

- Heavyweight Locking: Safe fallback under contention but expensive.

- Understanding synchronization internals helps developers tune concurrency and reduce latency in modern JVM applications.

FAQ

1. What is the JVM memory model and why does it matter?

It defines how threads see shared variables, ensuring synchronized blocks maintain visibility.

2. How does G1 GC differ from CMS?

G1 divides the heap into regions and compacts them incrementally; CMS did not compact and was prone to fragmentation.

3. When should I use ZGC or Shenandoah?

For low-latency workloads requiring minimal safepoint pauses.

4. What are JVM safepoints and why do they matter?

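They are points at which all application threads are paused so the JVM can perform GC, JIT deoptimization, or biased lock revocation; frequent or long safepoints show up as latency spikes.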

5. How do I solve OutOfMemoryError in production?

Check GC logs, increase heap, and fix memory leaks.

6. What are the trade-offs of throughput vs latency tuning?

Throughput tuning tolerates longer GC pauses in exchange for less total GC overhead; latency tuning keeps pauses short, often at some cost in throughput.

7. How do I read and interpret GC logs?

Look at GC frequency, pause times, and heap before/after.

8. How does JIT compilation optimize performance?

It compiles hot methods into native code, applying escape analysis and inlining.

9. What’s the future of GC in Java (Project Lilliput)?

Smaller object headers improve memory and synchronization performance.

10. How does GC differ in microservices vs monoliths?

Microservices need predictable latency; monoliths often prioritize throughput.