In high-performance Java applications, developers often encounter unexpected pauses that affect throughput or latency. Many of these pauses are caused not by garbage collection alone, but by JVM safepoints.

A safepoint is a state in which the JVM has stopped all application threads at well-defined locations, so that it can perform operations that need a consistent view of the heap and thread stacks, such as stop-the-world garbage collection phases, de-optimization of JIT-compiled code, or class redefinition. Safepoints are essential for JVM correctness but can have significant performance implications if not well understood.

This tutorial explains what safepoints are, why they exist, their impact on performance, and how to monitor and tune them.

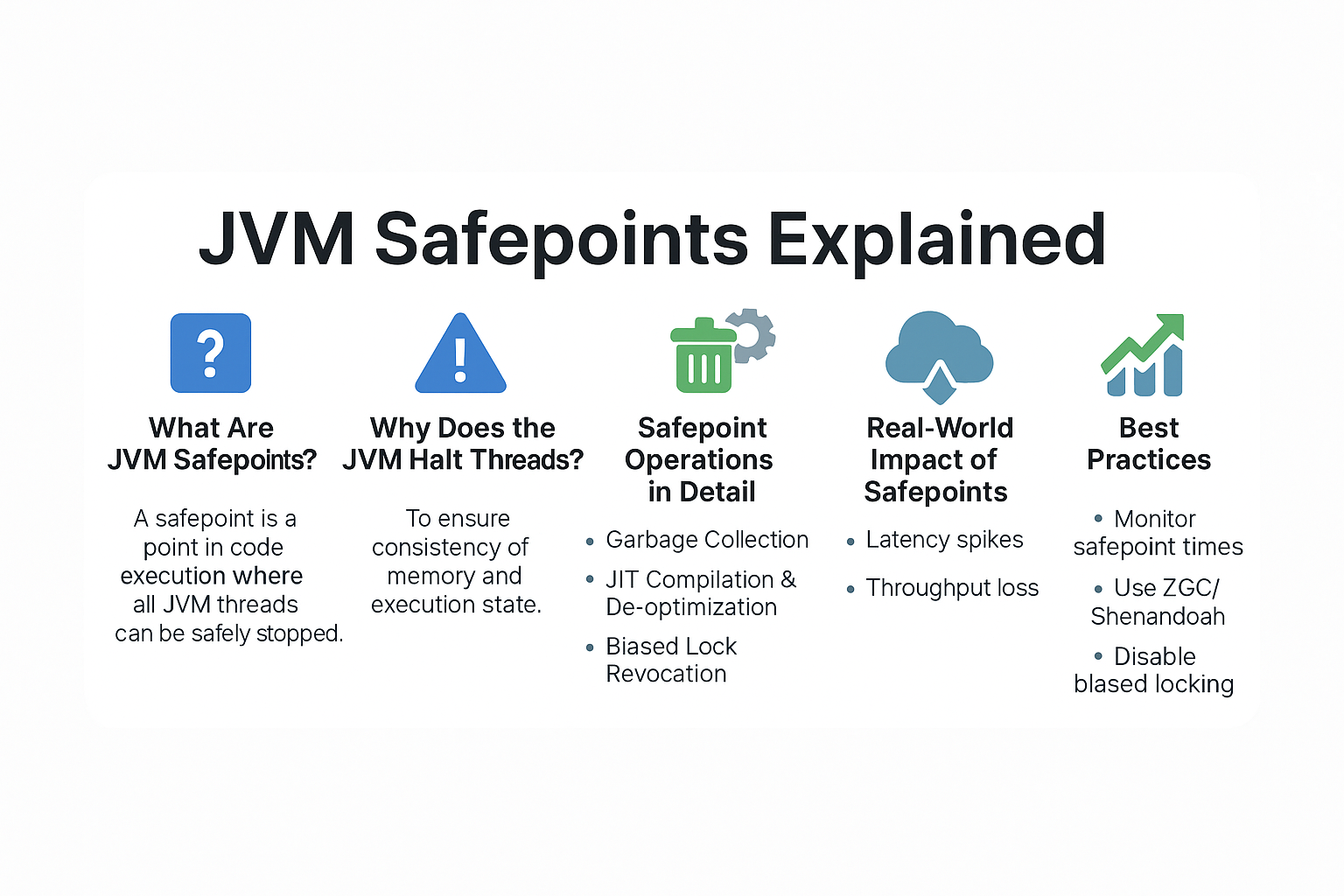

What Are JVM Safepoints?

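- A safepoint is a point in code execution where a Java thread can be safely stopped because its stack and heap references are in a known state. A global safepoint is reached once every Java thread is stopped at such a point (threads blocked or running native code already count as safe).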

- At safepoints, the JVM can execute operations that require a consistent global state.

- Examples of safepoint operations:

  - Garbage collection (stop-the-world phases)

  - De-optimization of JIT-compiled code

  - Biased lock revocation (on JDK versions that still use biased locking)

  - Class redefinition (via JVMTI/Instrumentation)

Why Does the JVM Halt Threads?

The JVM halts threads to ensure consistency of memory and execution state. For example:

- During garbage collection, the JVM must know which references are live.

- For de-optimization, the JVM must replace compiled code with interpreted code safely.

- For biased locking, it must adjust lock ownership without race conditions.

Analogy: Imagine stopping all cars at traffic lights before workers repaint road markings. Without the stop, chaos ensues.

How Safepoints Are Reached

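- The JVM places safepoint polls at specific locations. In HotSpot, the interpreter can check between bytecodes, while JIT-compiled code typically polls at method returns and loop back-edges (polls may be omitted from some counted loops).

- When a safepoint is requested, each running thread checks in at its next poll. The delay until the slowest thread checks in is the time to safepoint.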

- Once all threads reach a safepoint, the JVM performs the operation.
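The request, check-in, and release steps above can be imitated at user level. The following is only an analogy written in plain Java, not HotSpot's internal mechanism: the class `SafepointPollSketch`, its `poll()` and `runAtSafepoint()` methods, and the counter workload are all hypothetical names chosen for illustration.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.LockSupport;

/** User-level analogy of safepoint polling; the real mechanism lives inside the VM. */
final class SafepointPollSketch {
    private final Object lock = new Object();
    private final int workerCount;
    private volatile boolean requested; // the flag every worker polls
    private int parked;                 // workers currently stopped (guarded by lock)

    SafepointPollSketch(int workerCount) {
        this.workerCount = workerCount;
    }

    /** Called by workers at their "poll locations" (here: once per loop iteration). */
    void poll() throws InterruptedException {
        if (!requested) {
            return; // fast path: a single volatile read
        }
        synchronized (lock) {
            parked++;
            lock.notifyAll(); // tell the coordinator this worker has checked in
            while (requested) {
                lock.wait(); // stay stopped until the operation is done
            }
            parked--;
        }
    }

    /** Requests a stop, waits for every worker to check in, runs op, then releases them. */
    void runAtSafepoint(Runnable op) throws InterruptedException {
        synchronized (lock) {
            requested = true;
            try {
                while (parked < workerCount) {
                    lock.wait(); // the "time to safepoint" phase
                }
                op.run(); // every worker is parked, so shared state is stable
            } finally {
                requested = false;
                lock.notifyAll(); // release the workers
            }
        }
    }

    private static long sum(AtomicLong[] counters) {
        long total = 0;
        for (AtomicLong c : counters) {
            total += c.get();
        }
        return total;
    }

    /** Runs one operation at a simulated safepoint; returns true if state did not change meanwhile. */
    static boolean demo(int workers) {
        SafepointPollSketch sp = new SafepointPollSketch(workers);
        AtomicLong[] counters = new AtomicLong[workers];
        AtomicBoolean running = new AtomicBoolean(true);
        for (int i = 0; i < workers; i++) {
            AtomicLong counter = counters[i] = new AtomicLong();
            Thread t = new Thread(() -> {
                try {
                    while (running.get()) {
                        counter.incrementAndGet(); // stand-in for application work
                        sp.poll();                 // poll at the loop back-edge
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.setDaemon(true);
            t.start();
        }
        boolean[] stable = new boolean[1];
        try {
            sp.runAtSafepoint(() -> {
                long before = sum(counters);
                LockSupport.parkNanos(20_000_000L); // give a runaway worker time to show up
                stable[0] = before == sum(counters);
            });
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            running.set(false); // let the workers finish
        }
        return stable[0];
    }

    public static void main(String[] args) {
        System.out.println("State stable during simulated safepoint: " + demo(4));
    }
}
```

The sketch also makes the cost model visible: the coordinator cannot start its operation until the slowest worker reaches `poll()`, which is the user-level counterpart of time to safepoint.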

Example Flags for Monitoring Safepoints

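-XX:+PrintGCApplicationStoppedTime

-XX:+PrintSafepointStatistics -XX:PrintSafepointStatisticsCount=1

These are JDK 8 flags. On JDK 9 and later, unified logging replaces them; the equivalent is -Xlog:safepoint.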
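To confirm that a running service was actually started with safepoint logging, its own JVM arguments can be inspected. This is a minimal sketch: the class `SafepointLoggingCheck` is a hypothetical helper, and the match is a plain string heuristic over the JDK 8 flags above plus the JDK 9+ unified logging selector `-Xlog:safepoint` (it will not notice broader selectors such as `-Xlog:all`).

```java
import java.lang.management.ManagementFactory;
import java.util.List;

/** Heuristic check for safepoint logging switches among JVM arguments (illustrative only). */
final class SafepointLoggingCheck {

    /** Returns true if any argument looks like a safepoint logging switch. */
    static boolean hasSafepointLogging(List<String> jvmArgs) {
        for (String arg : jvmArgs) {
            boolean jdk8Flag = arg.equals("-XX:+PrintGCApplicationStoppedTime")
                    || arg.equals("-XX:+PrintSafepointStatistics");
            // Unified logging (JDK 9+): any -Xlog selector that mentions the safepoint tag.
            boolean unifiedLogging = arg.startsWith("-Xlog:") && arg.contains("safepoint");
            if (jdk8Flag || unifiedLogging) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Arguments passed to this JVM, excluding the arguments to main().
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("JVM arguments: " + jvmArgs);
        System.out.println("Safepoint logging enabled: " + hasSafepointLogging(jvmArgs));
    }
}
```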

Safepoint Operations in Detail

1. Garbage Collection

- Requires halting threads so the collector can trace the object graph safely.

- Stop-the-world GC pauses are safepoint pauses. Concurrent collectors still use safepoints, but only for short phases.

2. JIT Compilation & De-optimization

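- Compilation itself runs in background compiler threads; the safepoint cost comes when compiled code is invalidated or replaced (de-optimized).

- Affected threads must stop so their frames can be switched to a consistent execution state.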

3. Biased Lock Revocation

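- Revoking a bias held by another thread requires a pause so that lock ownership can be rewritten safely. Biased locking is disabled by default since JDK 15.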

4. Class Redefinition

- Dynamic reloading of classes (e.g., HotSwap in debugging) requires safepoints.

Real-World Impact of Safepoints

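- Latency spikes: Safepoint pauses range from well under a millisecond to, in bad cases, seconds, for example when one thread is slow to reach its poll or the operation itself is long.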

- Throughput loss: Frequent safepoints reduce CPU time for application code.

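- Microservices impact: In containerized deployments such as Kubernetes, CPU throttling or contention from co-located workloads can delay threads from reaching a safepoint, which stretches even small, frequent pauses.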
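One rough way to see such pauses from inside the application is a probe thread that sleeps for short intervals and records how far each wake-up overshoots. This is a sketch under stated assumptions: `PauseProbe` is a hypothetical class, and the overshoot it reports includes every kind of stall (safepoints, OS scheduling, CPU throttling), so it shows that pauses occur, not what caused them.

```java
import java.util.concurrent.locks.LockSupport;

/** Measures how late short sleeps wake up; a crude indicator of whole-JVM stalls. */
final class PauseProbe {

    /**
     * Repeatedly parks for intervalNanos during roughly durationMillis and
     * returns the worst observed overshoot in nanoseconds (never negative).
     */
    static long maxOvershootNanos(long intervalNanos, long durationMillis) {
        long begin = System.nanoTime();
        long durationNanos = durationMillis * 1_000_000L;
        long worst = 0;
        while (System.nanoTime() - begin < durationNanos) {
            long start = System.nanoTime();
            LockSupport.parkNanos(intervalNanos);
            // parkNanos may return early, in which case the overshoot is negative and ignored.
            long overshoot = System.nanoTime() - start - intervalNanos;
            worst = Math.max(worst, overshoot);
        }
        return worst;
    }

    public static void main(String[] args) {
        long worst = maxOvershootNanos(1_000_000L, 5_000L); // 1 ms interval for about 5 s
        System.out.println("Worst wake-up delay: " + worst / 1_000 + " us");
    }
}
```

Correlating the timestamps of large overshoots with safepoint log entries is what separates safepoint pauses from other sources of jitter.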

Case Study: Trading System

- Issue: Latency spikes during peak trading hours.

- Diagnosis: Frequent safepoints for biased lock revocation.

- Solution: Disabled biased locking (-XX:-UseBiasedLocking).

- Result: Reduced safepoint frequency, improved latency consistency.
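Before applying a fix like this, it helps to check what the running JVM actually reports for the flag. The sketch below assumes a HotSpot-based JVM and uses `com.sun.management.HotSpotDiagnosticMXBean`; the class `BiasedLockingCheck` and its `vmOption` helper are hypothetical names. On JVMs that no longer know the flag, the lookup returns an empty result rather than a value.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;
import java.util.Optional;

/** Looks up a HotSpot VM option by name, if the running JVM knows it. */
final class BiasedLockingCheck {

    /** Returns the option's current value, or empty if the JVM does not recognize it. */
    static Optional<String> vmOption(String name) {
        try {
            HotSpotDiagnosticMXBean bean =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            if (bean == null) {
                return Optional.empty(); // no HotSpot diagnostic bean available
            }
            return Optional.of(bean.getVMOption(name).getValue());
        } catch (IllegalArgumentException e) {
            return Optional.empty(); // option does not exist in this JVM
        }
    }

    public static void main(String[] args) {
        String value = vmOption("UseBiasedLocking").orElse("not recognized by this JVM");
        System.out.println("UseBiasedLocking: " + value);
    }
}
```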

Pitfalls and Troubleshooting

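- Long Time to Safepoint: Caused by a thread that is slow to reach its next poll (for example, a long-running compiled loop without polls); every other thread waits while it catches up.

- High Frequency: Due to frequent de-optimization or biased lock revocation.

- Monitoring Gaps: Without safepoint logs, these pauses are hard to diagnose.

- Misattribution: Pauses are often blamed on GC when a non-GC safepoint operation, or time to safepoint, is the real cause.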

Best Practices

- Always monitor safepoint times in latency-sensitive apps.

- Use modern collectors (ZGC, Shenandoah) that do most of their work concurrently and keep safepoint pauses short.

- Avoid excessive class redefinition in production.

- Disable biased locking for highly concurrent workloads on JDK 14 and earlier; since JDK 15 it is disabled by default.

- Profile safepoints with JFR + Mission Control.
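JFR events can also be consumed in-process instead of through Mission Control. The sketch below uses the `jdk.jfr.consumer.RecordingStream` API (JDK 14 or later). The event name `jdk.ExecuteVMOperation` and its `safepoint` and `operation` fields are what I would expect on recent HotSpot builds, but treat them as assumptions and verify them against your JDK; `SafepointJfrSketch` is a hypothetical class name.

```java
import java.util.concurrent.atomic.AtomicLong;
import jdk.jfr.consumer.RecordingStream;

/** Streams VM operation events and counts those executed at a safepoint (illustrative). */
final class SafepointJfrSketch {

    /** Observes the JVM for about windowMillis and returns how many safepoint operations were seen. */
    static long countSafepointOperations(long windowMillis) {
        AtomicLong count = new AtomicLong();
        try (RecordingStream rs = new RecordingStream()) {
            // Assumed event name; withoutThreshold() asks for short operations as well.
            rs.enable("jdk.ExecuteVMOperation").withoutThreshold();
            rs.onEvent("jdk.ExecuteVMOperation", event -> {
                // Field names are assumptions; hasField() guards against JDKs that differ.
                if (event.hasField("safepoint") && event.getBoolean("safepoint")) {
                    count.incrementAndGet();
                    Object operation = event.hasField("operation")
                            ? event.getValue("operation") : "unknown";
                    System.out.println(operation + " took "
                            + event.getDuration().toNanos() / 1_000 + " us");
                }
            });
            rs.startAsync();  // deliver events on a background thread
            System.gc();      // hint that usually triggers at least one VM operation
            Thread.sleep(windowMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return count.get();
    }

    public static void main(String[] args) {
        System.out.println("Safepoint operations observed: " + countSafepointOperations(3_000L));
    }
}
```

Streamed events arrive with some delay, so a short window may report fewer operations than actually ran; a longer window or a recorded `.jfr` file gives a fuller picture.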

JVM Version Tracker

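- Java 8: Safepoints for biased lock revocation are a common issue.

- Java 11: G1 is the default collector (since Java 9); safepoint logging moves to unified logging (-Xlog:safepoint).

- Java 17: ZGC and Shenandoah (production-ready since Java 15) keep safepoint pauses short; biased locking is disabled by default (since Java 15).

- Java 21+: Generational ZGC arrives in Java 21. NUMA-aware allocation in G1 (added in Java 14) and Project Lilliput's compact object headers target lower GC overhead, which indirectly reduces time spent in GC safepoints.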

Conclusion & Key Takeaways

- Safepoints are essential for JVM correctness, ensuring a consistent global state.

- They introduce pauses that can affect performance in latency-sensitive environments.

- Monitoring and tuning safepoints is as important as GC tuning.

- Modern JVMs reduce safepoint impact, but understanding them is key for optimization.

FAQ

1. What is the JVM memory model and why does it matter?

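The Java Memory Model defines when writes by one thread become visible to others. Safepoints are a separate mechanism: they give the JVM itself a consistent view of heap and thread stacks during operations such as GC and de-optimization.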

2. How does G1 GC differ from CMS?

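G1 compacts the heap region by region as it collects; CMS did not compact during normal cycles and could fragment the old generation. CMS was removed in JDK 14.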

3. When should I use ZGC or Shenandoah?

When minimizing safepoint pauses is critical.

4. What are JVM safepoints and why do they matter?

Points where threads pause so the JVM can run GC/JIT operations safely.

5. How do I solve OutOfMemoryError in production?

Check GC logs and safepoint logs to see how the heap behaved before the error, then fix the leak or tune the heap size.

6. What are the trade-offs of throughput vs latency tuning?

Throughput may tolerate longer safepoints; latency-sensitive apps cannot.

7. How do I read and interpret GC logs?

Look for stop-the-world times and correlate with safepoints.

8. How does JIT compilation optimize performance?

It compiles hot methods to native code in background threads; safepoints are needed mainly when that code must later be invalidated and de-optimized.

9. What’s the future of GC in Java (Project Lilliput)?

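Project Lilliput aims to shrink object headers, which reduces heap footprint; less memory to manage generally means less GC work and therefore less time in GC-related safepoints.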

10. How does GC differ in microservices vs monoliths?

Microservices need predictable latency; safepoints affect SLAs more severely.