The HotSpot JVM has long been the workhorse for Java execution, using the C1 and C2 JIT compilers to balance startup speed and peak performance. However, as modern applications demand lower latency, higher throughput, and polyglot capabilities, the JVM ecosystem has evolved to embrace GraalVM.

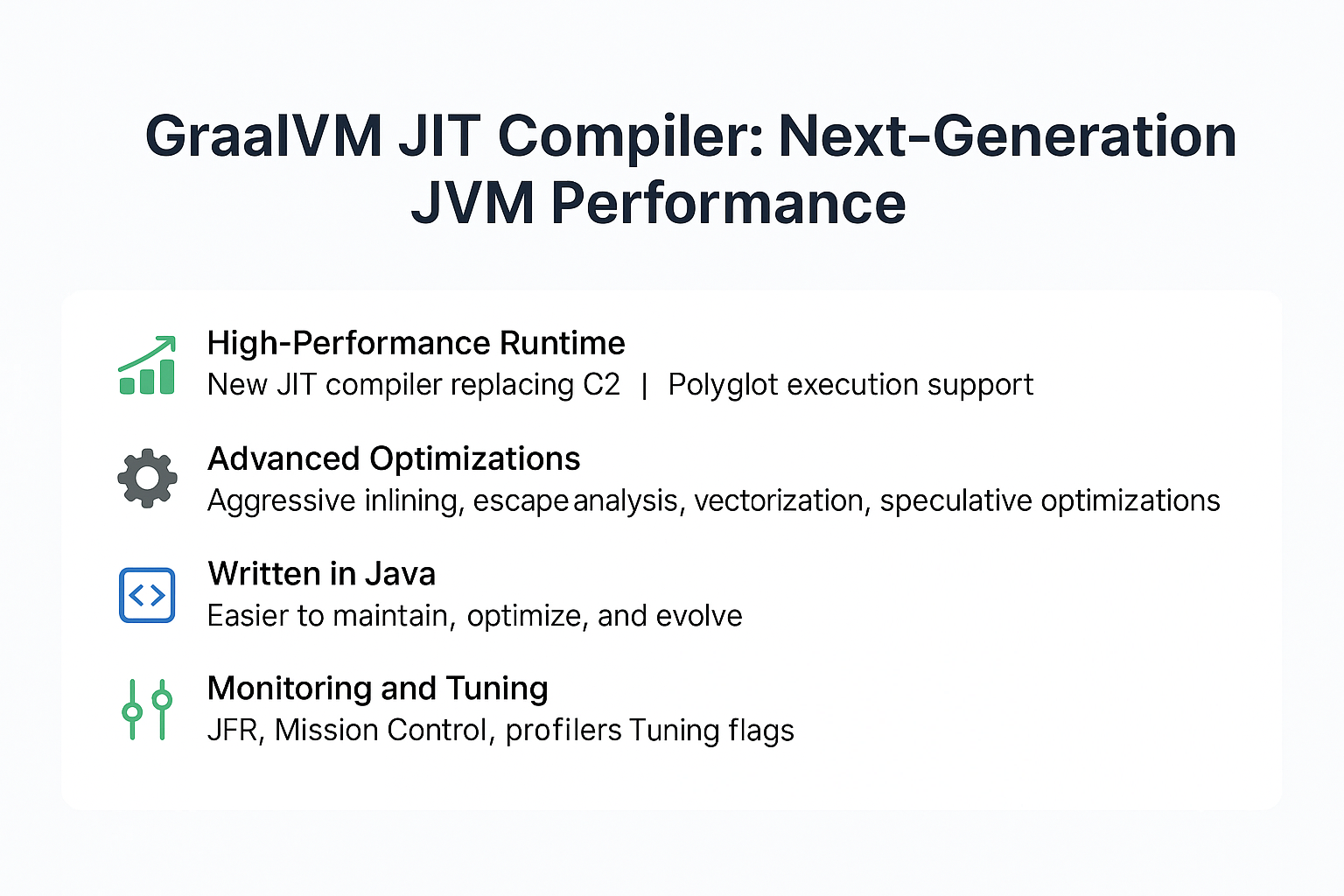

GraalVM introduces the Graal compiler, a next-generation JIT compiler written in Java itself that applies aggressive inlining and advanced escape analysis. Together with GraalVM's polyglot runtime, it is designed not only to accelerate Java but also to enable seamless interoperability across languages like JavaScript, Python, and Ruby.

This tutorial explores how the GraalVM JIT compiler works, how it improves over traditional HotSpot JIT, and how to leverage it in real-world systems.

What is GraalVM?

- A high-performance runtime for JVM languages (Java, Kotlin, Scala).

- Includes a new JIT compiler (the Graal compiler) that replaces C2 as the top-tier compiler.

- Supports polyglot execution—running Java, JavaScript, Python, and more together.

- Provides both JIT and Ahead-of-Time (AOT) compilation.

Analogy: If HotSpot JIT is a sports car, GraalVM JIT is a hybrid supercar—faster, smarter, and adaptable across different terrains (languages).

How GraalVM JIT Works

- Written in Java: Easier to maintain, optimize, and evolve.

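- Replaces C2: Plugs into HotSpot through JVMCI as the top-tier compiler and provides advanced optimizations.

- Profile-guided: Uses runtime profiles (receiver types, branch frequencies) collected by the lower tiers for adaptive optimization.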

- De-optimization: Falls back to interpreter if assumptions break.
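The profiling and deoptimization cycle above can be observed with a small program. The sketch below is a hypothetical example (the `DeoptDemo` class and its shapes are illustrative, not from any library; records need Java 16+): a call site sees only one receiver type during warm-up, then a second type arrives. Running it with `-XX:+PrintCompilation` and looking for `made not entrant` lines is one way to spot the resulting deoptimization, although whether and when it happens depends on the JVM version and compiler.

```java
// Hypothetical demo of profile-based speculation followed by deoptimization.
// Suggested run: java -XX:+PrintCompilation DeoptDemo.java
final class DeoptDemo {
    interface Shape { double area(); }

    record Square(double side) implements Shape {
        public double area() { return side * side; }
    }

    record Circle(double radius) implements Shape {
        public double area() { return Math.PI * radius * radius; }
    }

    // Hot method: the JVM records which receiver types reach s.area().
    static double totalArea(Shape[] shapes) {
        double sum = 0;
        for (Shape s : shapes) {
            sum += s.area();
        }
        return sum;
    }

    static double run(int warmupRounds) {
        Shape[] squares = new Shape[1_000];
        for (int i = 0; i < squares.length; i++) {
            squares[i] = new Square(1.0);
        }
        double acc = 0;
        // Phase 1: only Square is seen, so the profile is monomorphic and the
        // compiler may inline Square.area() behind a cheap type guard.
        for (int i = 0; i < warmupRounds; i++) {
            acc += totalArea(squares);
        }
        // Phase 2: a Circle fails that guard; the compiled code may be
        // deoptimized and later recompiled with the updated profile.
        Shape[] mixed = { new Square(2.0), new Circle(1.0) };
        acc += totalArea(mixed);
        return acc;
    }

    public static void main(String[] args) {
        System.out.println(run(20_000));
    }
}
```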

Key Optimizations

- Aggressive Inlining → Deeper method inlining than C2.

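- Escape Analysis Improvements → More allocations eliminated through scalar replacement, keeping an object's fields in registers instead of allocating it on the heap.

- Vectorization → Optimizes loops for SIMD instructions.

- Partial Escape Analysis → Removes an allocation on paths where the object does not escape and allocates it only on the branches where it does.

- Speculative Optimizations → Removes rarely taken branches based on runtime profiling, with deoptimization as the fallback if the speculation fails.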
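The difference between plain and partial escape analysis is easiest to see in code. The hypothetical `EscapeDemo` class below (names are illustrative; Java 16+ for records) contains one allocation that never escapes and one that escapes only on a rare branch. C2 also performs classic escape analysis; partial escape analysis is the technique associated with Graal. Whether either allocation is actually removed depends on the compiler, its version, and inlining, so comparing allocation rates with JFR or async-profiler is the way to check.

```java
// Hypothetical example; allocation elimination is never guaranteed.
final class EscapeDemo {
    // Small value object used as a temporary.
    record Point(int x, int y) {}

    // Global sink: storing a Point here makes it escape.
    static Point lastOutlier;

    // No escape: p never leaves this method, so after inlining the compiler
    // can replace it with two scalar values (scalar replacement).
    static int manhattan(int x, int y) {
        Point p = new Point(x, y);
        return Math.abs(p.x()) + Math.abs(p.y());
    }

    // Partial escape: p escapes only on the rare branch. Partial escape
    // analysis can move the allocation into that branch, so the common
    // path allocates nothing.
    static int clampedSum(int x, int y, int limit) {
        Point p = new Point(x, y);
        int s = p.x() + p.y();
        if (s > limit) {
            lastOutlier = p; // the only place where p escapes
            return limit;
        }
        return s;
    }

    public static void main(String[] args) {
        long acc = 0;
        for (int i = 0; i < 5_000_000; i++) {
            // clampedSum escapes only when i % 100 == 99 (sum 100 > limit 99).
            acc += manhattan(i, -i) + clampedSum(i % 100, 1, 99);
        }
        System.out.println(acc);
    }
}
```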

Comparing HotSpot C2 vs GraalVM JIT

| Feature | HotSpot C2 | GraalVM JIT |
|---|---|---|
| Language | C++ | Java |
| Optimizations | Strong | More aggressive |
| Polyglot Support | No | Yes (Java, JS, Python, etc.) |
| Maintenance | Complex | Easier (Java-based) |
| AOT Support | Limited | Full (via native-image) |

Using GraalVM JIT

Enabling Graal JIT in JVM

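On OpenJDK 10 through 16, which bundled Graal as an experimental JIT compiler (JEP 317), enable it with:

```shell
java -XX:+UnlockExperimentalVMOptions -XX:+UseJVMCICompiler -jar MyApp.jar
```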

Using GraalVM Distribution

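In a GraalVM distribution, Graal is already the default top-tier JIT compiler, so no extra flags are needed:

```shell
java -jar MyApp.jar
```

The `gu install native-image` step seen in older guides only adds the Native Image AOT tool on older GraalVM releases (recent releases include it); it is not required for JIT compilation.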
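To confirm what a running JVM is actually configured to use, the `UseJVMCICompiler` flag can be read at runtime through `com.sun.management.HotSpotDiagnosticMXBean`. This is a sketch assuming a HotSpot-based JVM; the `JitCheck` class is hypothetical. The flag lookup can fail either because the build has no JVMCI support or because the flag is experimental and locked, so the code reports that case instead of guessing.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: report the UseJVMCICompiler setting of the current JVM.
final class JitCheck {
    static String jvmciCompilerFlag() {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        if (bean == null) {
            return "unavailable (no HotSpotDiagnosticMXBean in this VM)";
        }
        try {
            // VMOption.getValue() returns the flag value as a string, e.g. "true".
            return bean.getVMOption("UseJVMCICompiler").getValue();
        } catch (IllegalArgumentException e) {
            // The flag is absent in this build, or locked as an experimental option.
            return "unavailable (flag absent or locked)";
        }
    }

    public static void main(String[] args) {
        System.out.println("VM: " + System.getProperty("java.vm.name")
                + " " + System.getProperty("java.vm.version"));
        System.out.println("UseJVMCICompiler = " + jvmciCompilerFlag());
    }
}
```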

Ahead-of-Time Compilation

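```shell
native-image -jar MyApp.jar myapp
./myapp
```

The optional name after the JAR sets the executable's file name; without it, `native-image` derives the name from the JAR file name.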

Monitoring and Tuning Graal JIT

- JFR + Mission Control: Monitor compilation activity.

- `-XX:+PrintCompilation`: View compiled methods.

- `-XX:+UseJVMCICompiler`: Enables Graal as the default compiler.

- Profilers (VisualVM, async-profiler): Measure CPU, allocations, and GC pressure.
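Compilation activity can also be captured programmatically with the `jdk.jfr` API and inspected later in Mission Control. The sketch below (a hypothetical `JfrCompilationCount` class; requires a JDK that includes JFR, such as OpenJDK 11 or later) enables the `jdk.Compilation` event with a zero duration threshold, runs a workload, dumps the recording, and counts the events. How many compilations land inside the window varies from run to run.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import java.util.List;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordingFile;

// Sketch: count JIT compilation events recorded by JFR while a workload runs.
final class JfrCompilationCount {
    static long countCompilations(Runnable workload) throws IOException {
        Path dir = Files.createTempDirectory("jfr-demo");
        Path file = dir.resolve("compilations.jfr");
        try {
            try (Recording recording = new Recording()) {
                // Zero threshold: keep short compilations, not only slow ones.
                recording.enable("jdk.Compilation").withThreshold(Duration.ZERO);
                recording.start();
                workload.run();
                recording.stop();
                recording.dump(file); // write the recorded data to disk
            }
            List<RecordedEvent> events = RecordingFile.readAllEvents(file);
            return events.stream()
                    .filter(e -> e.getEventType().getName().equals("jdk.Compilation"))
                    .count();
        } finally {
            Files.deleteIfExists(file);
            Files.deleteIfExists(dir);
        }
    }

    // Hypothetical CPU-bound workload that gives the JIT methods to compile.
    static long busyWork() {
        long acc = 0;
        for (int i = 0; i < 2_000_000; i++) {
            acc += Integer.bitCount(i) * (long) (i % 7);
        }
        return acc;
    }

    public static void main(String[] args) throws IOException {
        long n = countCompilations(() -> System.out.println(busyWork()));
        System.out.println("jdk.Compilation events: " + n);
    }
}
```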

Real-World Case Studies

Case 1: Microservices Startup Latency

- Issue: Slow startup with C2.

- Solution: Graal JIT reduced warm-up time with faster optimizations.

- Result: 30% improvement in response latency.

Case 2: Data Processing Job

- Issue: Heavy CPU-bound workload.

- Solution: Graal JIT optimized vectorized loops.

- Result: 20% throughput gain.
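The kind of loop that benefits from auto-vectorization can be illustrated with two hypothetical kernels (the `LoopKernels` class is not from the case study). The first has independent iterations, the shape SIMD auto-vectorizers look for; the second carries a dependency from one iteration to the next. Whether a particular JIT actually emits SIMD instructions depends on the compiler version and the CPU.

```java
import java.util.Arrays;

// Hypothetical kernels illustrating vectorizable and non-vectorizable loops.
final class LoopKernels {
    // y[i] = a * x[i] + y[i]: iterations are independent, so an
    // auto-vectorizer can process several elements per instruction.
    static void saxpy(float a, float[] x, float[] y) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("length mismatch");
        }
        for (int i = 0; i < x.length; i++) {
            y[i] = a * x[i] + y[i];
        }
    }

    // In-place prefix sum: each iteration reads the previous result, a
    // loop-carried dependency that blocks straightforward vectorization.
    static void prefixSum(int[] a) {
        for (int i = 1; i < a.length; i++) {
            a[i] += a[i - 1];
        }
    }

    public static void main(String[] args) {
        float[] x = new float[1 << 20];
        float[] y = new float[1 << 20];
        Arrays.fill(x, 1.5f);
        for (int round = 0; round < 200; round++) {
            saxpy(0.5f, x, y); // repeated calls let the JIT compile the loop
        }
        System.out.println(y[0]);
    }
}
```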

Case 3: Polyglot Application

- Issue: Running Java + JS in the same runtime.

- Solution: Graal polyglot execution avoided costly interop overhead.

- Result: Faster cross-language calls and lower memory footprint.

Pitfalls and Troubleshooting

- Experimental Flags: Graal JIT may require enabling JVMCI (JVM Compiler Interface).

- Warm-up Overhead: Initial profiling may still introduce latency.

- AOT vs JIT Trade-off: AOT reduces warm-up but may sacrifice peak performance.

- Compatibility: Some frameworks may require configuration changes.

Best Practices

- Use Graal JIT for long-running, CPU-intensive apps.

- Benchmark against HotSpot C2 before production rollout.

- Use AOT (`native-image`) for CLI tools or serverless functions.

- Monitor with JFR and profiling tools.

- Keep GraalVM updated—optimizations improve rapidly.
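Benchmarking against C2 has to separate warm-up from steady state. The hypothetical `QuickBench` class below is a rough sketch of that split, not a substitute for JMH: it runs warm-up iterations (where profiling and JIT compilation happen), then times the measured iterations, writing results to a volatile sink so the work cannot be discarded as dead code.

```java
import java.util.function.LongSupplier;

// Rough sketch of a warm-up-aware timing loop; JMH remains the proper tool.
final class QuickBench {
    // Volatile sink so the JIT cannot discard the measured work as dead code.
    static volatile long sink;

    static double nanosPerOp(LongSupplier op, int warmupIters, int measuredIters) {
        if (measuredIters <= 0) {
            throw new IllegalArgumentException("measuredIters must be > 0");
        }
        // Warm-up: interpretation, profiling, and JIT compilation happen here.
        for (int i = 0; i < warmupIters; i++) {
            sink += op.getAsLong();
        }
        // Measurement: ideally runs mostly compiled code.
        long start = System.nanoTime();
        for (int i = 0; i < measuredIters; i++) {
            sink += op.getAsLong();
        }
        return (double) (System.nanoTime() - start) / measuredIters;
    }

    // Hypothetical workload; replace with the code path you care about.
    static long work() {
        long h = 1125899906842597L;
        for (int i = 0; i < 64; i++) {
            h = 31 * h + i;
        }
        return h;
    }

    public static void main(String[] args) {
        double ns = nanosPerOp(QuickBench::work, 100_000, 1_000_000);
        System.out.printf("%s: %.1f ns/op%n", System.getProperty("java.vm.name"), ns);
    }
}
```

Running the same class on a stock JDK (C2) and on a GraalVM JDK gives a first rough comparison; for numbers that inform a rollout decision, JMH with multiple forks is the safer choice.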

JVM Version Tracker

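- Java 8: C2 is the top-tier JIT. Stock JDK 8 has no JVMCI, so Graal runs only on JVMCI-enabled builds such as early GraalVM releases.

- Java 11: G1 is the default GC (since Java 9); Graal JIT is available as an experimental option (added in Java 10 by JEP 317).

- Java 17: OpenJDK removes the experimental Graal JIT (JEP 410); GraalVM distributions based on JDK 17 continue to ship it for production use.

- Java 21+: Graal JIT development continues in GraalVM for JDK 21 and later; Project Lilliput's smaller object headers arrive as an experimental feature in JDK 24 (JEP 450).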

Conclusion & Key Takeaways

- GraalVM JIT is the next evolution of Java performance tuning.

- Replaces C2 with a Java-based compiler, easier to extend.

- Offers advanced optimizations, faster warm-up, and polyglot execution.

- Works alongside GC tuning and profiling for production-ready systems.

By adopting GraalVM JIT, organizations can unlock cutting-edge performance and cross-language flexibility in modern JVM environments.

FAQ

1. What is the JVM memory model and why does it matter?

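The Java Memory Model defines when a write by one thread is guaranteed to be visible to another (the happens-before rules). It matters because it bounds which reorderings the JIT and the CPU may perform, so correctly synchronized code stays correct after compilation; it does not prevent data races by itself.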
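A minimal sketch of that guarantee, using a hypothetical `Publication` class: a volatile write publishes an earlier plain write to a reader thread. If `ready` were a plain field, the JIT would be permitted to hoist the read out of the spin loop, and the reader might never finish.

```java
// Sketch: safe publication through a volatile flag (happens-before edge).
final class Publication {
    private int payload;             // plain field, written before the flag
    private volatile boolean ready;  // volatile flag

    void publish(int value) {
        payload = value;  // (1) plain write
        ready = true;     // (2) volatile write; (1) happens-before any read that sees true
    }

    int awaitPayload() {
        // Volatile read on every iteration; the JIT cannot hoist it out of the loop.
        while (!ready) {
            Thread.onSpinWait();
        }
        return payload;  // guaranteed to observe the value written in (1)
    }

    static int demo(int value) throws InterruptedException {
        Publication p = new Publication();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> seen[0] = p.awaitPayload());
        reader.start();
        p.publish(value);
        reader.join();  // join() orders the reader's write to seen[0] before this read
        return seen[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo(42));  // prints 42
    }
}
```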

2. How does G1 GC differ from CMS?

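G1 divides the heap into regions and compacts live objects as it evacuates them; CMS did not compact the old generation, which could become fragmented over time. CMS was removed in JDK 14.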

3. When should I use ZGC or Shenandoah?

For ultra-low-latency and very large heaps.

4. What are JVM safepoints and why do they matter?

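Safepoints are points where each thread's state is known to the JVM, so it can pause threads safely for GC, deoptimization, and other VM operations.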

5. How do I solve OutOfMemoryError in production?

Use heap dumps, tune -Xmx, and check for leaks.
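The standard flags `-XX:+HeapDumpOnOutOfMemoryError` and `-XX:HeapDumpPath=<path>` capture a dump automatically when the error occurs. For an on-demand dump, a sketch assuming a HotSpot-based JVM can call `HotSpotDiagnosticMXBean.dumpHeap`; the `HeapDumper` class is hypothetical. The resulting `.hprof` file can be opened in VisualVM or another heap analyzer to look for leaks.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

// Sketch: write an on-demand heap dump (HotSpot-based JVMs only).
final class HeapDumper {
    static Path dump(Path dir, boolean liveOnly) throws IOException {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        if (bean == null) {
            throw new IllegalStateException("HotSpotDiagnosticMXBean not available");
        }
        // dumpHeap expects a .hprof suffix and fails if the file already exists.
        Path out = dir.resolve("heap-" + ProcessHandle.current().pid() + ".hprof");
        // liveOnly = true: dump only objects that are still reachable.
        bean.dumpHeap(out.toAbsolutePath().toString(), liveOnly);
        return out;
    }

    public static void main(String[] args) throws IOException {
        Path file = dump(Files.createTempDirectory("dumps"), true);
        System.out.println("Heap dump written to " + file + " (" + Files.size(file) + " bytes)");
    }
}
```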

6. What are the trade-offs of throughput vs latency tuning?

Throughput maximizes efficiency; latency ensures predictability.

7. How do I read and interpret GC logs?

Focus on pause times, heap usage, and frequency.
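GC logs (enabled with `-Xlog:gc` on JDK 9 and later) remain the authoritative source for pause times. As a coarse in-process complement, the standard `GarbageCollectorMXBean`s expose per-collector counts and accumulated collection times. The sketch below uses a hypothetical `GcStats` class; note that the reported time for concurrent collectors is not the same as pause time.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: snapshot GC frequency and accumulated time per collector.
final class GcStats {
    // Static field so the allocations below cannot be optimized away.
    static byte[] sink;

    // Collector name -> {collection count, accumulated collection time in ms}.
    // Either value may be -1 if the JVM does not report it.
    static Map<String, long[]> snapshot() {
        Map<String, long[]> stats = new LinkedHashMap<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            stats.put(gc.getName(), new long[] {gc.getCollectionCount(), gc.getCollectionTime()});
        }
        return stats;
    }

    public static void main(String[] args) {
        Map<String, long[]> before = snapshot();
        // Allocate short-lived garbage to provoke some collections.
        for (int i = 0; i < 200_000; i++) {
            sink = new byte[1024];
        }
        Map<String, long[]> after = snapshot();
        after.forEach((name, v) -> {
            long[] b = before.getOrDefault(name, new long[] {0, 0});
            System.out.printf("%s: +%d collections, +%d ms%n", name, v[0] - b[0], v[1] - b[1]);
        });
    }
}
```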

8. How does JIT compilation optimize performance?

By compiling hot methods into native code, removing interpretation overhead.

9. What’s the future of GC in Java (Project Lilliput)?

Smaller object headers improve memory efficiency for GC and JIT.
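A worked example, assuming a 64-bit HotSpot JVM with compressed class pointers (a 12-byte header) and the default 8-byte object alignment, compared with the 8-byte compact headers of JEP 450, for an object with two `int` fields. Here $h$ is the header size and $f$ the bytes of field data, ignoring field-layout gaps:

$$
\text{size} = 8\left\lceil \frac{h + f}{8} \right\rceil,\qquad
\text{legacy: } 8\left\lceil \tfrac{12 + 8}{8} \right\rceil = 24\ \text{bytes},\qquad
\text{compact: } 8\left\lceil \tfrac{8 + 8}{8} \right\rceil = 16\ \text{bytes}
$$

The object shrinks by 8 bytes, about 33%. Objects whose size rounds up to the same multiple of 8 either way, such as one with a single `int` field (16 bytes in both layouts), see no gain.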

10. How does GC differ in microservices vs monoliths?

Microservices need predictable latency, monoliths emphasize throughput.