Introduction

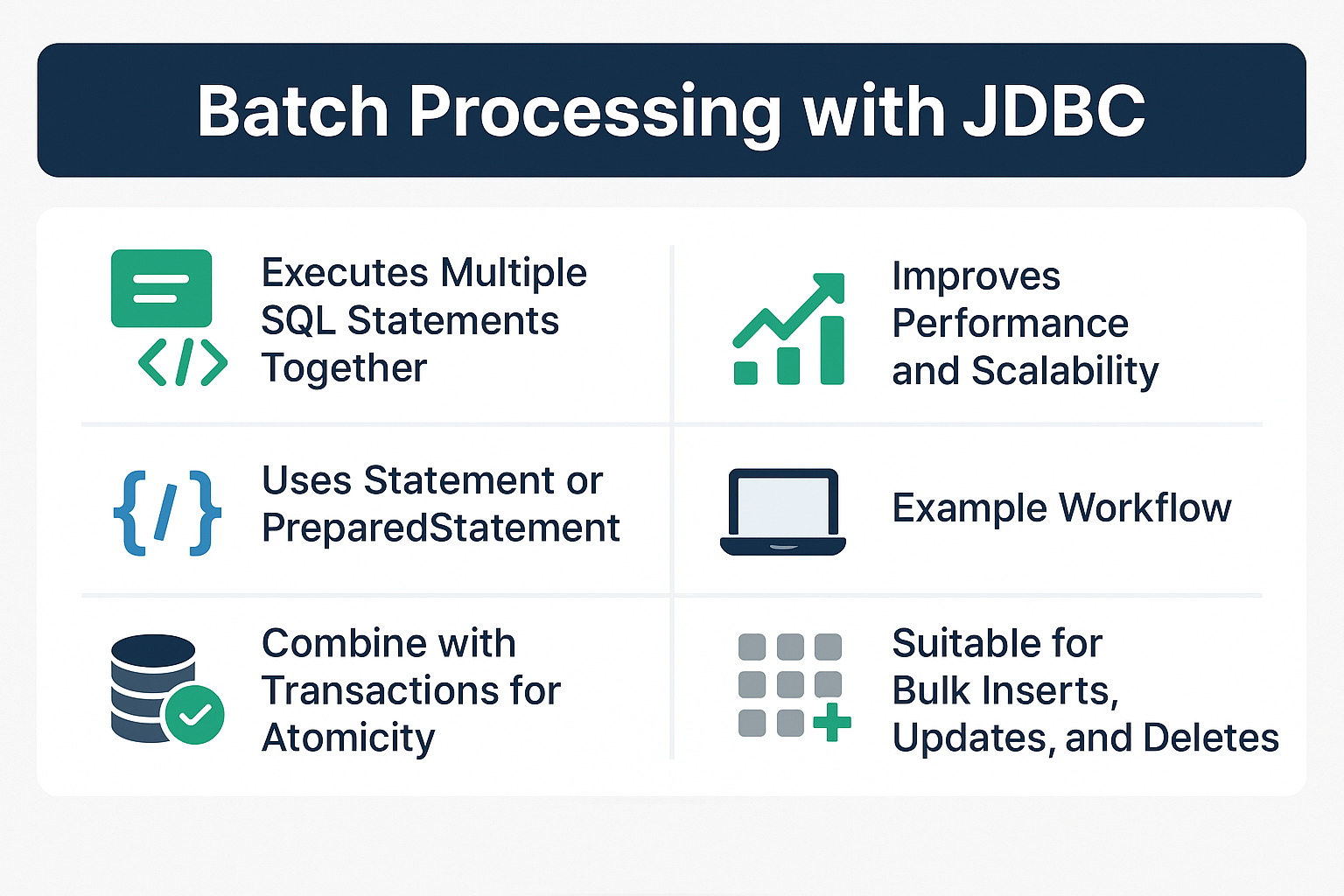

Batch processing in JDBC allows multiple SQL statements to be executed in a single database call. This reduces network overhead, improves performance, and is essential for applications dealing with large data sets.

Why Batch Processing Matters

- Performance: Minimizes round trips to the database.

- Scalability: Handles large data operations efficiently.

- Atomic Operations: Can be combined with transactions for reliability.

Core Concepts of JDBC Batch Processing

- Batch: A group of SQL statements executed together.

- Statement vs PreparedStatement: Both can be used, but PreparedStatement offers better performance and security.

- Transactions: Often combined with batch processing for consistency.

Batch Processing Workflow

Java App → Connection → Statement/PreparedStatement → addBatch() → executeBatch() → Database

Real-World Use Cases

- Bulk Inserts: Importing CSV data into a database.

- Mass Updates: Updating product prices in e-commerce systems.

- Log Processing: Writing large volumes of logs to a database.

- Data Migration: Moving records between databases.

Implementing Batch Processing

Using Statement

import java.sql.*;

public class StatementBatchExample {

public static void main(String[] args) {

String url = "jdbc:mysql://localhost:3306/testdb";

String user = "root";

String password = "password";

try (Connection conn = DriverManager.getConnection(url, user, password);

Statement stmt = conn.createStatement()) {

conn.setAutoCommit(false);

stmt.addBatch("INSERT INTO employees (name, role) VALUES ('Alice', 'Developer')");

stmt.addBatch("INSERT INTO employees (name, role) VALUES ('Bob', 'Manager')");

stmt.addBatch("UPDATE employees SET role='Lead' WHERE name='Alice'");

int[] result = stmt.executeBatch();

conn.commit();

System.out.println("Batch executed: " + result.length + " statements.");

} catch (SQLException e) {

e.printStackTrace();

}

}

}

Using PreparedStatement

String sql = "INSERT INTO employees (name, role) VALUES (?, ?)";

try (PreparedStatement ps = conn.prepareStatement(sql)) {

conn.setAutoCommit(false);

ps.setString(1, "John");

ps.setString(2, "Engineer");

ps.addBatch();

ps.setString(1, "Emma");

ps.setString(2, "Analyst");

ps.addBatch();

int[] result = ps.executeBatch();

conn.commit();

System.out.println("PreparedStatement batch executed: " + result.length + " statements.");

}

Statement vs PreparedStatement for Batch Processing

| Feature | Statement | PreparedStatement |

|---|---|---|

| SQL Injection Safety | Vulnerable | Safe with parameter binding |

| Performance | Re-parsed each time | Precompiled, faster on reuse |

| Dynamic Parameters | Hardcoded | Uses ? placeholders |

| Best Use | Simple static queries | Dynamic or repeated queries |

Common Mistakes and Anti-Patterns

- Not using transactions: Can lead to partial updates on failure.

- Mixing large and small queries: Reduces batch efficiency.

- Forgetting to clearBatch(): May lead to duplicate executions.

- Ignoring batch size: Extremely large batches can exhaust memory.

Security Implications

- Always use PreparedStatement for user input queries.

- Combine with transactions to avoid data inconsistency.

- Validate data before adding to batch.

Performance and Scalability

- Use batching for thousands of inserts/updates.

- Combine with connection pooling for high-performance systems.

- Tune batch sizes (e.g., 500–1000 statements per batch) for optimal memory and speed.

Best Practices

- Always use try-with-resources.

- Disable auto-commit during batch execution.

- Use PreparedStatement for dynamic queries.

- Test different batch sizes for your specific database.

Real-World Analogy

Think of batch processing like sending a package of letters instead of individual letters. Instead of going to the post office for every letter (query), you bundle them and send them at once, saving time and effort.

Conclusion & Key Takeaways

- Batch processing improves JDBC performance significantly.

- Use PreparedStatement with parameterized queries for secure batching.

- Combine with transactions and pooling for scalable, reliable systems.

FAQ

-

What is batch processing in JDBC?

Executing multiple SQL statements in a single database call. -

Why use batch processing?

To reduce network overhead and improve performance for bulk operations. -

Can I mix SELECT queries in a batch?

No, batches are typically used for INSERT, UPDATE, and DELETE. -

Does batch processing work with all databases?

Yes, supported by all major RDBMS via JDBC. -

What is the difference between executeBatch and executeUpdate?

executeBatch()runs multiple statements,executeUpdate()runs one. -

How to handle failures in batch processing?

Use transactions and rollback on failure. -

What is the optimal batch size?

Depends on the database; typically 500–1000 statements. -

Is PreparedStatement faster for batch processing?

Yes, due to precompilation and parameter binding. -

Can I use batch processing with connection pooling?

Yes, it's recommended for production systems. -

Does batch processing prevent SQL injection?

Only if you use PreparedStatement with parameterized queries.